Error Messages

This page explains the causes and solutions for error messages that appear in TROCCO.

Error messages addressed in this article

This page covers error messages that appear in the following logs:

- preview error log

- run error log

- execution log

Data Destination BigQuery

Errors that can occur when connecting to BigQuery

org.embulk.exec.PartialExecutionException: java.net.SocketException: Connection or outbound has closed

Possible Causes

This is a rare error that occurs when a network interruption breaks the connection while TROCCO is connecting to Data Destination BigQuery.

In most cases, the error can be resolved by rerunning the job.

Solution

Errors can be avoided by adding a retry setting to the ETL Configuration or Workflow definition.

- For ETL Configuration:

- In the Job Setting tab of ETL Configuration STEP 2, set the maximum number of retries to 1 or more.

- For Workflow definition:

- Set the number of retries in Job Setting to 1 or more.
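The effect of a retry setting can be pictured as a loop that reruns the job when a transient connection error occurs. The following is a minimal sketch of that idea only, not TROCCO's implementation. `run_with_retries` and `flaky_job` are hypothetical names, and Python's `ConnectionError` stands in for the `java.net.SocketException` in the log.

```python
import time

def run_with_retries(job, max_retries=1, wait_seconds=0.0):
    """Run job(); on a connection-level error, rerun it up to max_retries times."""
    attempt = 0
    while True:
        try:
            return job()
        except ConnectionError as exc:  # stand-in for java.net.SocketException
            if attempt >= max_retries:
                raise  # retries exhausted: surface the original error
            attempt += 1
            print(f"attempt {attempt} failed ({exc}); retrying")
            time.sleep(wait_seconds)

# Hypothetical job that fails once with a transient error, then succeeds.
calls = {"count": 0}

def flaky_job():
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("Connection or outbound has closed")
    return "transferred"

result = run_with_retries(flaky_job, max_retries=1)
print(result)
```

With the maximum number of retries at 0, the same transient failure would end the job, which is why a value of 1 or more is recommended.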

Error when transferring nested JSON columns to BigQuery

Field <column name> is type RECORD but has no schema.

Possible Causes

This error occurs when a column set as JSON type in TROCCO is transferred as RECORD type.

Specifically, the error occurs in the following situation:

- In Column Setting on the Data Settings tab of ETL Configuration STEP 2, the column's Data Type is set to json.

- In Column Setting on the Output Option tab of ETL Configuration STEP 2, the column's Data Type is set to RECORD, and the transfer is run.

Solution

Create a table in BigQuery in advance to serve as a schema template.

- On the BigQuery side, create a table with the same schema as the table you want to transfer, in the Data Destination dataset.

- In TROCCO, on the Output Option tab of ETL Configuration STEP 2, enter the name of the table created in the previous step in the Table to Refer to Schema Information as Template field.
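A RECORD column needs nested field definitions, which is what the template table supplies. As a rough illustration, the sketch below walks a schema written in BigQuery's JSON schema representation (`name`, `type`, `mode`, `fields`) and lists RECORD columns that have no nested fields. The function name and the sample column names are hypothetical.

```python
def records_without_schema(schema, prefix=""):
    """Return dotted names of RECORD fields that define no nested fields."""
    missing = []
    for field in schema:
        name = prefix + field["name"]
        if field["type"].upper() in ("RECORD", "STRUCT"):
            nested = field.get("fields") or []
            if not nested:
                missing.append(name)
            else:
                # Recurse so that nested RECORD columns are checked as well.
                missing.extend(records_without_schema(nested, name + "."))
    return missing

# Hypothetical schema for the template table.
template_schema = [
    {"name": "id", "type": "INTEGER", "mode": "REQUIRED"},
    {"name": "payload", "type": "RECORD", "mode": "NULLABLE", "fields": [
        {"name": "user", "type": "STRING", "mode": "NULLABLE"},
        {"name": "detail", "type": "RECORD", "mode": "NULLABLE"},  # no nested fields
    ]},
]

print(records_without_schema(template_schema))  # ['payload.detail']
```

Any column reported by such a check would need its nested fields defined before the table is usable as a template.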

Data Destination kintone

Error when transferring a column of a data type not supported by the Data Destination table

Caused by: com.kintone.client.exception.KintoneApiRuntimeException: HTTP error status 400, {"code":"CB_IJ01","id":"<ID>","message":"Illegal JSON string. "}

Possible Causes

This error occurs when the Data Type of the Column Setting in TROCCO does not correspond to the Data Type defined in the table in Data Destination kintone.

Note that "code":"CB_IJ01" in the error message above is output when the value (the JSON built by TROCCO) is invalid for the Data Destination table.

Solution

Please set a different Data Type in the Column Setting of the Output Option tab of ETL Configuration STEP 2.

Data Source BigQuery

Error when referencing Google Sheets as an external table

bigquery job failed: Access Denied: BigQuery BigQuery: Permission denied while getting Drive credential.

Possible Causes

This error occurs when the BigQuery table from which data is retrieved references Google Sheets as an external table.

Currently, TROCCO's Data Source BigQuery does not support the transfer of tables that reference Google Sheets as an external table.

Solution

On BigQuery, create a table that does not reference an external table, and set that table as the source table in the ETL Configuration.

Data Source MySQL

Error when a connection to MySQL cannot be established

Error: java.lang.RuntimeException: CommunicationsException: com.mysql.jdbc.exceptions.jdbc4. Communications link failure

Possible Causes

This error occurs when a connection with MySQL cannot be established.

Specifically, the following cases are possible:

- Storage on the MySQL side is full, and MySQL keeps restarting.

- MySQL does not respond within the configured timeout.

- Changes were made to MySQL, such as a version upgrade.

Solution

- First, check that MySQL is up and running.

- If MySQL is running normally, do one of the following:

- In the Input Option tab of STEP 2 of TROCCO's ETL Configuration, increase the value of the Socket Timeout.

- Increase the value of MySQL's net_read_timeout.
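As a first check of whether the MySQL host is reachable at all, a plain TCP connection attempt can help. The sketch below is an assumption-laden illustration: `can_connect` is a hypothetical helper, 3306 is MySQL's default port and may differ in your environment, and a successful TCP connection only shows that something is listening, not that MySQL is healthy.

```python
import socket

def can_connect(host, port=3306, timeout_seconds=5.0):
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_seconds):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False

# Example call (hypothetical host name):
# can_connect("mysql.example.internal", 3306, timeout_seconds=5.0)
```

If this check fails from a network that should be able to reach the database, the problem lies with the MySQL host or the network path rather than with the timeout values.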

Error when unable to establish SSH/SSM connection

An error occurred during SSH connection. Please check your settings. Net::SSH::Proxy::ConnectError:. Failed to connect to your bastion host. Please check your SSM configuration.

Possible Causes

This error is caused by a problem in the MySQL, SSH, or SSM connection environment.

Solution

Take one of the following actions:

- Check the number of simultaneous sshd connections, the logs (/var/log/secure), and so on to locate the problem. After raising the limit on the number of simultaneous sshd connections, rerun the job.

- The error may be caused by a temporary environmental problem, so wait a while and rerun the job. If the problem still occurs frequently, add a retry setting in "Job Settings" in STEP 2 of ETL Configuration in TROCCO.

Data Source Redshift

Error when the fetch size exceeds 1000 rows

Fetch size 10000 exceeds the limit of 1000 for a single node configuration. Reduce the client fetch/cache size or upgrade to a multi node installation

Possible Causes

As the error message states, Redshift limits the fetch size to 1000 rows in a single-node configuration, so an error occurs when TROCCO tries to fetch more than 1000 rows at a time.

The fetch size must be set to 1000 rows or fewer.

Solution

In the Input Option tab of STEP 2 of ETL Configuration in TROCCO, change the number of records that the cursor processes at one time to 1000 or less.
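The setting above controls how many rows the cursor requests per round trip, not how many rows are transferred in total. The sketch below illustrates that distinction with Python's built-in `sqlite3` module, which stands in for Redshift purely so the example runs without a cluster. The table name and row count are made up.

```python
import sqlite3

FETCH_SIZE = 1000  # at or below the single-node limit quoted in the error

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER)")
conn.executemany("INSERT INTO events VALUES (?)", [(i,) for i in range(2500)])

cursor = conn.execute("SELECT id FROM events ORDER BY id")
batch_sizes = []
while True:
    rows = cursor.fetchmany(FETCH_SIZE)  # never asks for more than FETCH_SIZE rows
    if not rows:
        break
    batch_sizes.append(len(rows))
conn.close()

print(batch_sizes)  # [1000, 1000, 500]
```

All 2500 rows are still read. They simply arrive in batches that respect the limit.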

Data Source file storage system (S3, GCS, etc.)

Please refer to the File/Storage System Connector for information on Connectors that may display this error message.

Error when a column of numeric or date/time type contains an unsupported character

org.embulk.spi.DataException: Invalid record at <line number>.

Caused by: org.embulk.standards.CsvParserPlugin$CsvRecordValidateException: java.lang.NumberFormatException: For input string: ""

Possible Causes

This error occurs when a column inferred as a numeric or date/time type contains unsupported values (null, empty string, etc.).

TROCCO follows Embulk's behavior here: an error occurs if a numeric or date/time column contains unsupported characters.

More specifically, this occurs when both of the following apply:

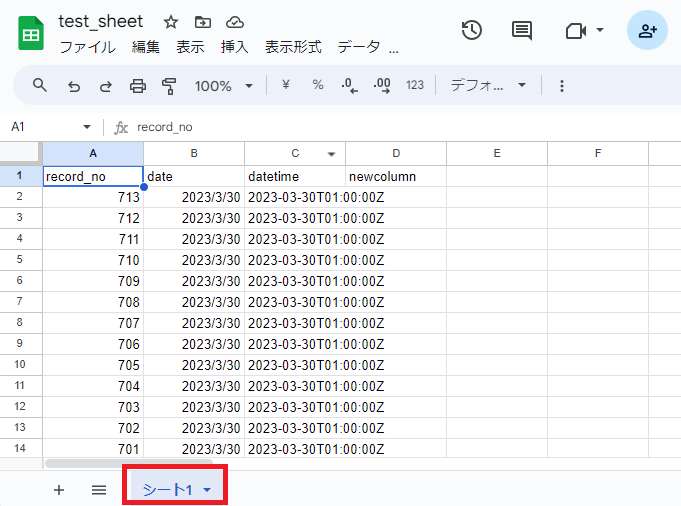

- During the Automatic Data Setting that runs on the transition to STEP 2 of ETL Configuration, the column's data type is inferred as a numeric or date/time type.

- The column whose data type was inferred as numeric or date/time contains unsupported characters.

Solution

There are two possible ways to handle this.

Convert the column to string and transfer

- In Column Setting on the Data Setting tab of ETL Configuration STEP 2, set the Data Type of the relevant column to string.

- Click Preview Changes.

Replace unsupported characters with an arbitrary number and transfer

*Depending on the ETL Configuration, this approach may not be available because columns cannot be set on the Output Option tab.

- On the Data Settings tab of ETL Configuration STEP 2, use string conversion to replace the unsupported characters with an arbitrary number.

- For more information, see About Regular Expression Replacement.

- In Column Setting on the Output Option tab of ETL Configuration STEP 2, set the Data Type of the relevant column to a numeric type (e.g., INTEGER type).
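Before choosing between the two approaches, it can help to find out which rows actually contain unparseable values. The following sketch scans a CSV column for values that cannot be parsed as an integer. `find_non_numeric`, the sample data, and the column name are hypothetical, and the line numbers assume that no field spans multiple lines.

```python
import csv
import io

def find_non_numeric(csv_text, column):
    """Return (line_number, value) pairs where `column` cannot be parsed as int."""
    problems = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for line_number, row in enumerate(reader, start=2):  # line 1 is the header
        value = row[column]
        try:
            int(value)
        except (TypeError, ValueError):
            problems.append((line_number, value))
    return problems

sample = "id,amount\n1,100\n2,\n3,abc\n4,250\n"
print(find_non_numeric(sample, "amount"))  # [(3, ''), (4, 'abc')]
```

The empty value on line 3 corresponds to the `For input string: ""` part of the error message above.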

Data Source Salesforce

Error due to insufficient settings on the Salesforce side

Setup::Error::ConfigError: (INVALID_LOGIN) INVALID_LOGIN: Invalid username, password, security token; or user locked out.

Possible Causes

This error occurs when you cannot log in to Salesforce from TROCCO.

Specifically, the following cases are possible:

- IP restrictions are in place on the Salesforce side and TROCCO's IP address is not allowed.

- Salesforce login URL is restricted.

- The Salesforce account associated with the Connection Configuration is not authorized to use the API.

Solution

- Refer to the IP addresses that need to be allowed by security groups, firewalls, etc., and allow those IP addresses on the Salesforce side.

- Please check your settings to see if you have any restrictions on the Salesforce side.

Error when a numeric type column contains a non-numeric value

cannot cast String to long: "-"NumberFormatException: For input string: "-"

Possible Causes

This error occurs when a numeric type column contains non-numeric characters.

In the error message above, the error is caused by a symbol (hyphen) in a numeric type column.

Solution

There are two possible ways to handle this.

Convert the column to string and transfer

- In Column Setting on the Data Setting tab of ETL Configuration STEP 2, set the Data Type of the relevant column to string.

- Click Preview Changes.

Replace unsupported characters with an arbitrary number and transfer

*Depending on the ETL Configuration, this approach may not be available because columns cannot be set on the Output Option tab.

- On the Data Settings tab of ETL Configuration STEP 2, use string conversion to replace the unsupported characters with an arbitrary number.

- For more information, see About Regular Expression Replacement.

- In Column Setting on the Output Option tab of ETL Configuration STEP 2, set the Data Type of the relevant column to a numeric type (e.g., INTEGER type).
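The replacement step can be prototyped outside TROCCO before it is configured. The sketch below uses Python's `re` module to replace a lone hyphen or an empty value with `0`. The pattern `^-?$` and the replacement value are illustrative assumptions, and the regular expression syntax accepted by TROCCO may differ from Python's.

```python
import re

def replace_placeholder(value, replacement="0"):
    """Replace a lone hyphen or an empty string with `replacement`."""
    # ^-?$ matches only "-" or "", so negative numbers such as "-35" are kept.
    return re.sub(r"^-?$", replacement, value)

raw_values = ["120", "-", "", "-35"]
cleaned = [int(replace_placeholder(v)) for v in raw_values]
print(cleaned)  # [120, 0, 0, -35]
```

Anchoring the pattern with `^` and `$` keeps the hyphen in legitimate negative numbers from being rewritten.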

Data Source kintone

Error when the token lacks the required permissions

Application ID acquisition error

An unexpected error has occurred. Please contact TROCCO's support team.

Possible Causes

This message is displayed when the app ID cannot be obtained in ETL Configuration STEP1.

This error occurs when neither "Record View Authority" nor "Record Add Authority" is granted to the token used for Connection Configuration.

Solution

Please grant "Record View Authority" and "Record Add Authority" on the kintone side to the token used for Connection Configuration.

Check the official documentation for details on permissions.

Data Source Google Sheets

Error when specifying a sheet name that does not exist in the spreadsheet

Error: (ClientError) badRequest: Unable to parse range: <sheet name>

Possible Causes

This error occurs when the sheet name set in ETL Configuration STEP1 is different from the sheet name on the spreadsheet specified in the sheet URL.

Solution

Open the file specified in the sheet URL and verify the following:

- Does the sheet name exist?

- Are there any unintended spaces or other typographical errors in the sheet name?

Data Source Facebook Ad Insights

Error due to processing termination in the Facebook API

async was aborted because the number of retries exceeded the limit

Possible Causes

This error occurs when a request to the Facebook API takes too long (more than about 15 minutes) and the Facebook API automatically terminates the process.

The volume of data being retrieved may be too large.

Solution

Narrow the range of the data acquisition period in STEP 1 of ETL Configuration of TROCCO.
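One way to narrow the period is to split a long range into shorter windows and transfer each window separately. The sketch below shows only the date arithmetic. `split_period` and the 14-day window are hypothetical choices, not values recommended by TROCCO or the Facebook API.

```python
from datetime import date, timedelta

def split_period(start, end, days_per_window):
    """Split the inclusive range [start, end] into consecutive windows."""
    if days_per_window < 1:
        raise ValueError("days_per_window must be at least 1")
    windows = []
    window_start = start
    while window_start <= end:
        window_end = min(window_start + timedelta(days=days_per_window - 1), end)
        windows.append((window_start, window_end))
        window_start = window_end + timedelta(days=1)
    return windows

for window_start, window_end in split_period(date(2023, 1, 1), date(2023, 1, 31), 14):
    print(window_start.isoformat(), window_end.isoformat())
# 2023-01-01 2023-01-14
# 2023-01-15 2023-01-28
# 2023-01-29 2023-01-31
```

Each window can then be used as the data acquisition period of a separate job run.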

Data Source X Ads (Formerly Twitter Ads)

Error when the token is not authorized to use the API

{"errors":[{"code":"UNAUTHORIZED_CLIENT_APPLICATION","message":"The client application making this request does not have access to Twitter Ads API"}],"request":{"params":{}}}

Possible Causes

The following are possible causes:

- The application to use the Twitter Ads API has not been approved.

- A token generated before the API usage application was approved is registered in Connection Configuration.

Solution

Please confirm that your application to use the Twitter Ads API has been approved.

If you have generated a token before being approved, please apply for Twitter Ads API usage and generate a token in the following order.

- Apply to use the API

- Apply to use the Ads API

- Generate tokens

Data Source LINE Ads

Error when the date format of the data acquisition period is incorrect

code: 400. {"errors":[{"reason":"INVALID_VALUE","message":"the value is invalid","property":"since"}]}

Possible Causes

This error occurs when the date format of the data acquisition period is incorrectly specified when the Performance Report is selected as the download type in STEP 1 of ETL Configuration.

Solution

Specify the data acquisition period in the %Y-%m-%d (YYYY-MM-DD) format. (Example: 2023-02-01)

If Custom Variables are used, the date format for Custom Variables must also be specified in the format %Y-%m-%d.
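A value can be checked against the %Y-%m-%d format before it is entered. The sketch below uses Python's `datetime.strptime`. `is_valid_period_date` is a hypothetical helper, and the extra round-trip comparison, which rejects unpadded input such as `2023-2-1`, is a deliberately strict assumption rather than documented API behavior.

```python
from datetime import datetime

def is_valid_period_date(text):
    """Return True if text is a real calendar date written as YYYY-MM-DD."""
    try:
        parsed = datetime.strptime(text, "%Y-%m-%d")
    except ValueError:
        return False
    # strptime accepts unpadded input such as "2023-2-1"; require exact padding.
    return parsed.strftime("%Y-%m-%d") == text

for candidate in ["2023-02-01", "2023/02/01", "2023-02-30", "2023-2-1"]:
    print(candidate, is_valid_period_date(candidate))
```

Only the first candidate passes. The others fail because of the wrong separator, a nonexistent date, and missing zero padding, respectively.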

Data Source App Store Connect API

Error when the private key in Connection Configuration is incorrect

Error: RetryGiveupException: org.embulk.util.retryhelper. org.apache.http.HttpException: Request is not successful, code=401, body={[0x2b][0x2c]"errors": [{[0x39][0x3a][0x3b]"status": "401",[0x4c][0x4d][0x4e]"code": "NOT_AUTHORIZED",[0x68][0x69][0x6a]"title": "Authentication credentials are missing or invalid.

Possible Causes

This error occurs when the private key registered in the Connection Configuration used for ETL Configuration is incomplete, for example when the ---BEGIN *--- and ---END *--- lines were removed when the key was entered.

Solution

Copy the entire issued private key string, paste it into the Private Key field of the Connection Configuration, and save it.
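Whether a pasted key still contains its first and last lines can be checked mechanically. The sketch below assumes the key is PEM-formatted text, whose marker lines use five hyphens on each side (for example `-----BEGIN PRIVATE KEY-----`). `has_pem_markers` is a hypothetical helper, the key body shown is a placeholder rather than real key material, and the check does not validate the key itself.

```python
import re

def has_pem_markers(private_key):
    """Return True if the text starts with a BEGIN line and ends with the matching END line."""
    lines = [line.strip() for line in private_key.strip().splitlines()]
    if len(lines) < 3:  # BEGIN line, at least one body line, END line
        return False
    begin = re.fullmatch(r"-----BEGIN ([A-Z ]+)-----", lines[0])
    end = re.fullmatch(r"-----END ([A-Z ]+)-----", lines[-1])
    return bool(begin and end and begin.group(1) == end.group(1))

complete_key = "-----BEGIN PRIVATE KEY-----\nPLACEHOLDERBODY\n-----END PRIVATE KEY-----\n"
body_only = "PLACEHOLDERBODY\n"

print(has_pem_markers(complete_key))  # True
print(has_pem_markers(body_only))     # False
```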

Error when the necessary permissions are not granted to the Issuer ID in Connection Configuration

Error: RetryGiveupException: org.embulk.util.retryhelper. org.apache.http.HttpException: Request is not successful, code=403, body={[0x2b] "errors" : [ {[0x3c] "id" : "xxxxxx",[0x6f] "status" : "403",[0x85] "code" : "FORBIDDEN_ERROR",[0xa5] "title" : "This request is forbidden for security reasons",[0xe5] "detail" : "The API key in use does not allow this request"[0x125] } ][0x12b]}

Possible Causes

This error occurs when the required permissions are not granted to the Issuer ID registered in the Connection Configuration used for ETL Configuration.

Solution

On the App Store Connect side, grant the Finance permission under "Reporting and Analytics" to the Issuer ID registered in Connection Configuration.

ETL Configuration

Error when acquired data does not exist at preview or job execution

Error: No input records to preview

Possible Causes

The cause of the error depends on when the error is displayed.

If an error is displayed when previewing ETL Configuration STEP2

If no data could be retrieved from the Data Source set in ETL Configuration STEP 1, the preview shows an error.

If the relevant error is displayed when you execute an ETL Job

If an ETL Job is executed with Schema Change Detection turned on and no data is retrieved from the Data Source, the Job will fail.

Specifically, the following cases are possible:

- Incremental Data Transfer was selected as the transfer method, and no new records were generated after the last ETL Job was executed.

- No records were generated within the specified data acquisition period.

Solution

If an error is displayed when previewing ETL Configuration STEP2

- For Data Sources where a data acquisition period can be set

- Extend the data acquisition period so that it covers a period in which data exists.

- Click "Run Automatic Data Setting" or "Preview Changes" in STEP 2 and check whether a preview appears.

- For Data Sources where record filtering can be set

- If you have filtered the records by query or other means, remove the filter.

- Click "Run Automatic Data Setting" or "Preview Changes" in STEP 2 and check whether a preview appears.

- For File/Storage-based Data Sources

- Verify that the file exists at the specified path.

- Make sure you have specified the path in the correct way.

If the relevant error is displayed when you execute an ETL Job

If an error is displayed due to the aforementioned causes, it is expected behavior.

Run the job again after records have been generated.

Error when OutOfMemory occurs

See "What to do when OutOfMemoryError occurs".

Error when newly added column has no default value

columns: Column src '<new_column>' is not found in inputschema. Column '<new_column>' does not have "type" and "default" Suppressed: NullPointerException

Possible Causes

This error occurs when you add a column in Column Setting of ETL Configuration STEP 2 and preview the changes while both of the following conditions are met:

- The original column is set to "Add New".

- No default value is entered (blank).

If a column is manually added in Column Setting, an arbitrary value must be entered in the Default Value field.

Solution

Enter a default value for the newly added column and click Preview Changes to apply the change.

If you do not want to store the default value in the Data Destination, you can store an empty string instead as follows:

- In Column Setting, enter any value as the default value.

- Example: 999

- Use String Regular Expression Replacement to replace the value entered as the default value with an empty string.

- Example:

- Regular expression pattern: 999

- Replacement string: Enter nothing (blank)

With this method, an empty string is stored.

If you wish to store NULLs, consider using Programming ETL.
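The regular expression replacement described above can be tried out in isolation. The sketch below uses Python's `re` module and the example value 999. The anchored pattern `^999$` is an added assumption, shown because an unanchored `999` also rewrites other values that merely contain those digits. TROCCO's regular expression syntax may differ from Python's.

```python
import re

default_value = "999"

# Unanchored pattern, as in the example above: the default becomes empty.
print(repr(re.sub(r"999", "", default_value)))   # ''

# The same unanchored pattern also alters an unrelated value.
print(repr(re.sub(r"999", "", "19990")))          # '10'

# Anchored pattern: only a value that is exactly "999" is replaced.
print(repr(re.sub(r"^999$", "", default_value)))  # ''
print(repr(re.sub(r"^999$", "", "19990")))        # '19990'
```

Choosing a default value that cannot occur in real data reduces the risk of unintended replacements.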

Error when there is a problem with Programming ETL

Error: org.embulk.exec.ExecutionInterruptedException: java.lang.Exception: Internal API Error

Possible Causes

This error occurs when there is an error in the code written in the Programming ETL, or when the amount of processing data is too large and the memory allocated for the Programming ETL is used up.

Solution

Check the code for errors, or modify it to reduce the amount of data to be processed.

Data Source and Data Destination Services provided by Google

Error when Connection Configuration for services provided by Google is incorrect

org.embulk.exec.PartialExecutionException: java.lang.RuntimeException: java.lang.IllegalArgumentException:

Caused by: java.lang.IllegalArgumentException: expected primitive class, but got: class com.google.api.client.json.GenericJson

Possible Causes

This error occurs when Connection Configuration for services provided by Google (e.g., Google BigQuery, Google Sheets, Google Drive, etc.) is created using a JSON key and the content of the JSON key is incorrect.

This error is often caused by entering incomplete JSON keys when creating Connection Configuration.

Solution

- Open the created JSON key file in any text editor, select all the text, and copy it.

- Paste it into the JSON key entry field on the Connection Configuration Edit screen and save the Connection Configuration.
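An incomplete paste usually fails to parse as JSON, which makes it easy to detect before saving. The sketch below is a rough check under stated assumptions: `check_json_key` is a hypothetical helper, and `REQUIRED_KEYS` lists fields commonly found in a Google service account key rather than an exhaustive or official list.

```python
import json

# Assumed subset of fields present in a Google service account JSON key.
REQUIRED_KEYS = ("type", "project_id", "private_key", "client_email")

def check_json_key(text):
    """Return a list of problems found in a pasted service account JSON key."""
    try:
        key = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level value is not a JSON object"]
    return [f"missing field: {name}" for name in REQUIRED_KEYS if name not in key]

# A paste that was cut off before the closing brace (placeholder values only).
truncated = '{"type": "service_account", "project_id": "my-project"'
print(check_json_key(truncated))
```

An empty list means the text parsed as a JSON object and contains the assumed fields. It does not confirm that the credentials themselves are valid.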