summary

Only data that has changed can be identified and transferred to BigQuery using the CDC (Change Data Capture) method from MySQL's binlog.

This will result in reduced transfer volume and faster transfers.

Full Data Transfer must be done on the first run. Subsequently, in Incremental Data Transfer, only the Incremental Data Transfer is linked using the CDC method.

essential condition

MySQL Support Chart

| Master | Read Replica | Supported Versions | |

|---|---|---|---|

| Amazon Aurora MySQL RDS | ○ | x | 5.6.2+ |

| Amazon MySQL RDS | ○ | ○ | 5.6.2+ |

| Google CloudSQL MySQL | ○ | x | 5.6.2+ |

| MariaDB | ○ | ○ | 5.6.2+ |

| MySQL | ○ | ○ | 5.6.2+ |

MySQL Parameter Settings

[mysqld]

binlog_format=ROW

binlog_row_image=FULL

expire_logs_days=7

binlog_expire_logs_seconds=604800

log_bin=mysql-binlog

log_slave_updates=1

The supplemental explanation is as follows

-

It may be necessary to restart the DB for the binlog settings to take effect.

-

Column:

binlog_expire_logs_seconds

MySQL 8.0.1 or later must be specified.

Seven days is specified, but can be shortened.

It must be sufficiently longer than the frequency of each transfer. -

Column:

expire_logs_days

Set if MySQL 8.0.0 or lower.

Seven days is specified, but can be shortened.

It must be sufficiently longer than the frequency of each transfer. -

Column:

log_bin

You are free to change it as you wish. -

Column:

log_slave_updates

Set when retrieving binlog from Read Replica. -

RDS MySQL

Theexpire_logs_daysshould be set using themysql.rds_set_configurationprocedure.

Reference: https://docs.aws.amazon.com/ja_jp/AmazonRDS/latest/UserGuide/USER_LogAccess.Concepts.MySQL.html -

Cloud SQL

Enablepoint-in-time recovery.

Reference: https://cloud.google.com/sql/docs/mysql/backup-recovery/backups?authuser=2#tips-pitr

Authority of connecting users

The following three authorizations are required

SELECT

- Get Data

- Table schema information reference

Example: The

GRANT SELECT ON `<database_name>`.`<table_name>` to `<username>``@'%'`;

REPLICATION CLIENT、REPLICATION SLAVE

Example: The

GRANT REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO `<username>`@'%';

Primary Key in Data Source table

The Primary Key must be set in the ETL Configuration for the Data Source table.

Multiple Primary Keys (Composite Primary Key) are also supported.

Conversion function during transfer

MySQL to BigQuery CDC transfer supports the following

- Column Drop

- Column Data Conversion

- Change column name

Data transformation of columns is CAST in BigQuery. So we follow BigQuery's CAST rules.

For more information, please see below.

https://cloud.google.com/bigquery/docs/reference/standard-sql/conversion_rules?hl=ja

metadata

A column named _trocco_fetched_at is added and linked to the DWH.

This column has as its value the time the transfer was initiated by TROCCO's ETL Job.

The string _trocco_fetched_at cannot be used as the column name due to the convenience of adding this column.

Data Source MySQL SQL support

- INSERT

- UPDATE

- DELETE

- Deleted data will be logically deleted on the DWH side.

SQL with limitations

ALTER TABLE ... RENAME COLUMN old_col_name TO new_col_name

If schema tracking is enabled, data will be linked with the changed name.

Columns that are already linked will not be deleted and will have a value of NULL.ALTER TABLE ... ADD col_name date_type

Only when schema tracking is enabled, the transfer is automatic.

When adding columns, please configure them in the admin panel before adding MySQL columns.

If the columns are already linked after they are added, data may be missing.ALTER TABLE ... DROP col_name

Data linked to the DWH will not be deleted and will be NULL after deletion.ALTER TABLE ... MODIFY col_name column_definition

If the changed type is type-changeable, it can continue to be transferred.

If an error occurs, a Full Data Transfer must be performed.

Unsupported SQL

The following SQL cannot be caught by binlog, so the data will not be transferred correctly to the DWH.

- TRUNCATE

- DROP

- LOAD

- RENAME

- CASCADING DELETES

- CASCADING UPDATES

- ALTER TABLE ... SET DEFAULT

If the data is already linked and is NULL, it will remain NULL and will not be updated.

MySQL and BigQuery schema types

The data type conversion function allows you to convert data types that can be changed to the type you specify.

The default response is as follows Binary data is converted to hexadecimal and treated as a STRING.

| MySQL Data Types | BigQuery Data Types |

|---|---|

| BINARY BLOB LONGBLOB MEDIUMBLOB TINYBLOB VARBINARY CHAR ENUM LONGTEXT MEDIUMTEXT SET TIME TINYTEXT VARCHAR |

STRING |

| BIT TINYINT |

BOOLEAN |

| BIGINT INT MEDIUMINT SMALLINT YEAR |

INTEGER |

| DECIMAL NUMERIC DOUBLE FLOAT |

FLOAT |

| DATE | DATE |

| TIMESTAMP DATETIME |

TIMESTAMP |

| GEOMETRY GEOMETRYCOLLECTION JSON LINESTRING MULTILINESTRING MULTIPOINT MULTIPOLYGON POINT POLYGON |

STRING (STRUCT is currently not supported.) |

| CURVE MULTICURVE MULTISURFACE |

SURFACE Not supported. |

Configuration Flow

1. create ETL Configuration

Select Data Source MySQL binlog and Data Destination BigQuery and create ETL Configuration.

*Be sure to press "Save BigQuery Column Settings" in "STEP2 Data Preview and Advanced Settings" to save the settings.

2. initial Full Data Transfer

For the first time only, Full Data Transfer is performed to transfer all the current MySQL tables to BigQuery.

On the Run New Job screen, check the Do Full Data Transfer checkbox and run the job.

3. Incremental Data Transfer Schedule Configuration

Please register your schedule from the Schedule Setup screen.

A Job will be started at the Job Setting frequency and the differences will be captured.

If Full Data Transfer is required

Full Data Transfer is required in the following cases

- Initial Transfer

- Runtime error on type conversion on BigQuery.

- I want to erase all data, not logical deletion.

Delete the transferred table before executing. - If you execute the query described in "Data Source MySQL SQL Response

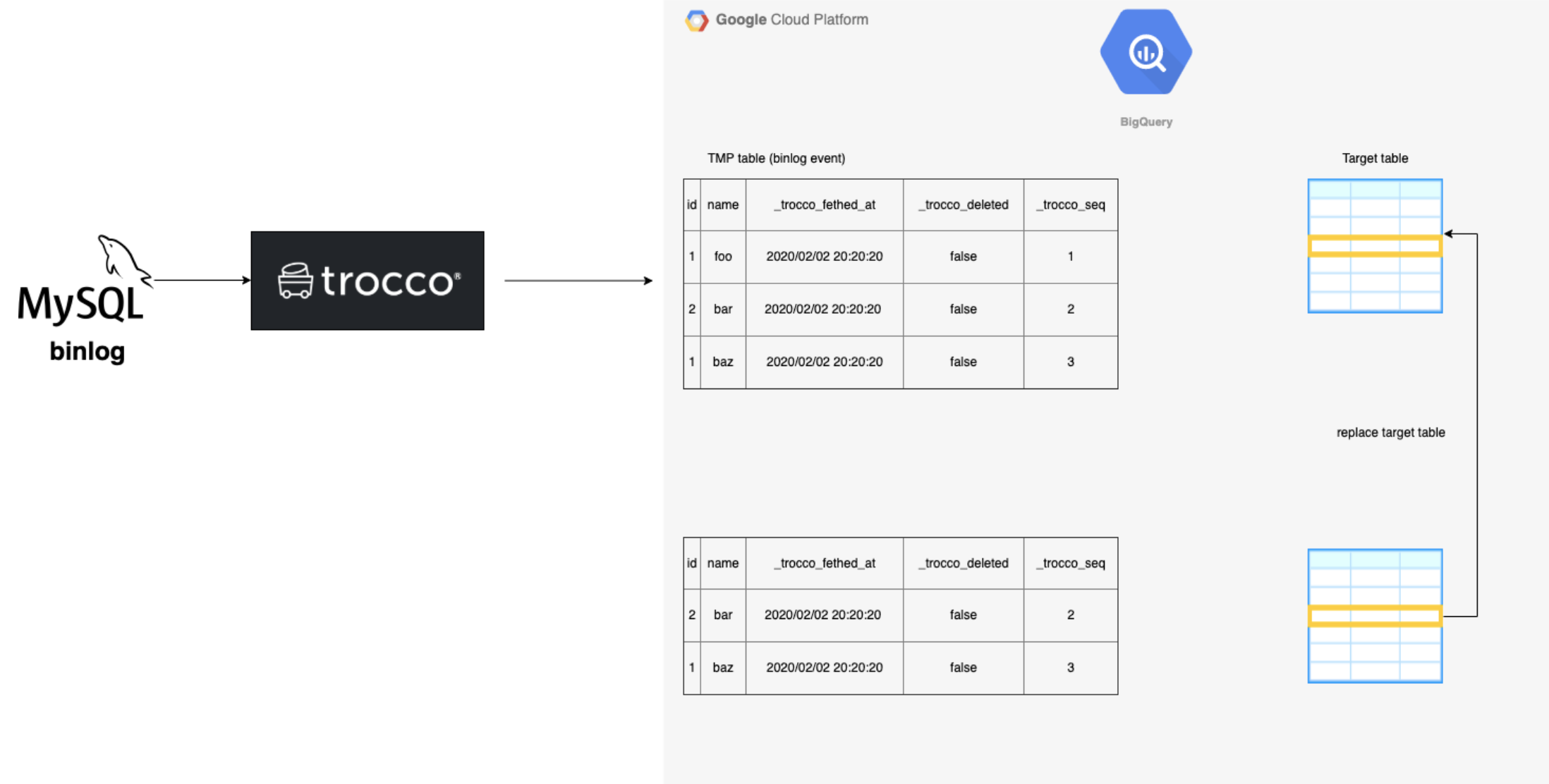

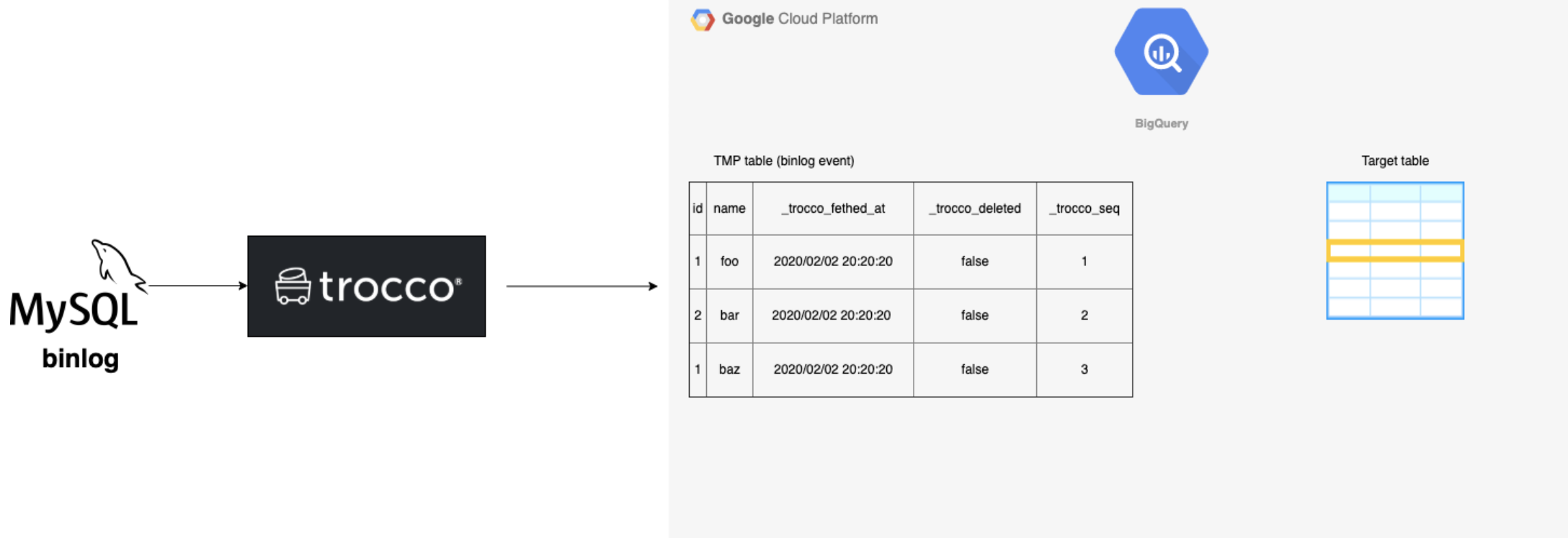

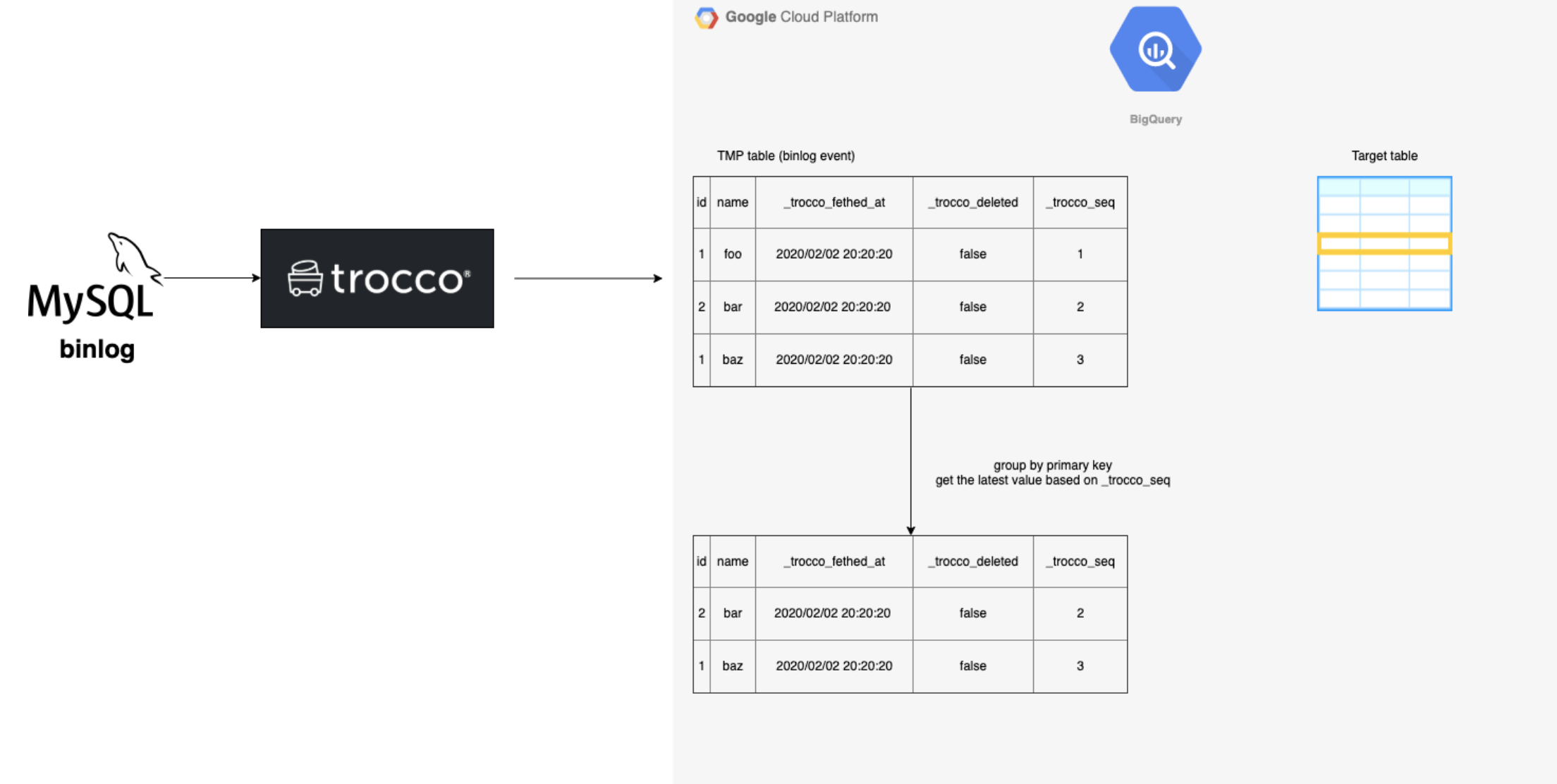

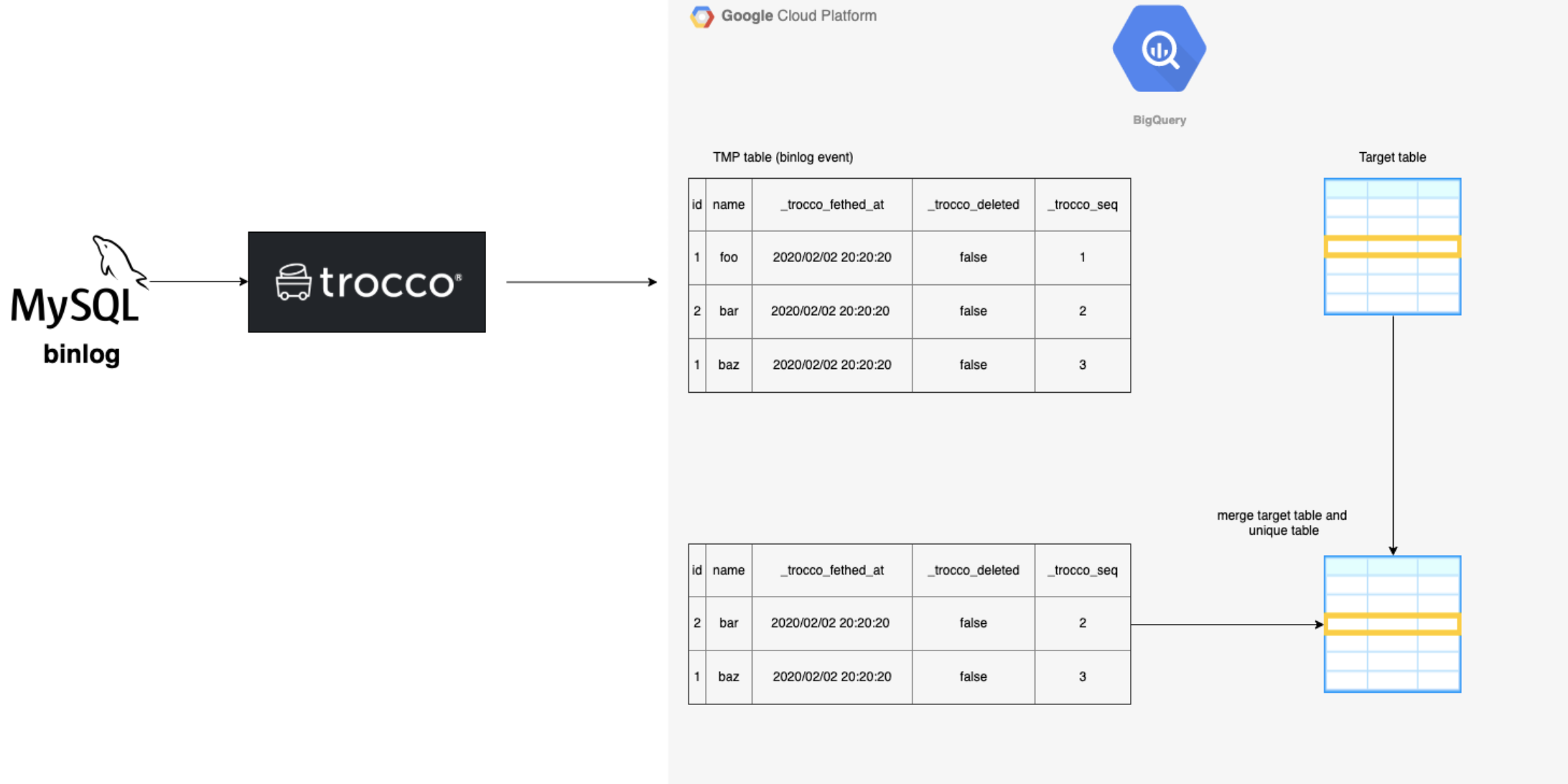

technical overview

Overview of the technical aspects of CDC

target table: the table where the final data will be placed.

-

TROCCO reads the MySQL binlog and transfers the binlog of the configured table onto the BQ.

-

With binlog data, there are duplicates, so we create a set of the most recent records for each record.

-

Merge the tables created in step 2 with the tables already linked.

-

Replace the table merged in 3 with the target table.