This page contains weekly releases.

2024-07-29

UI・UX

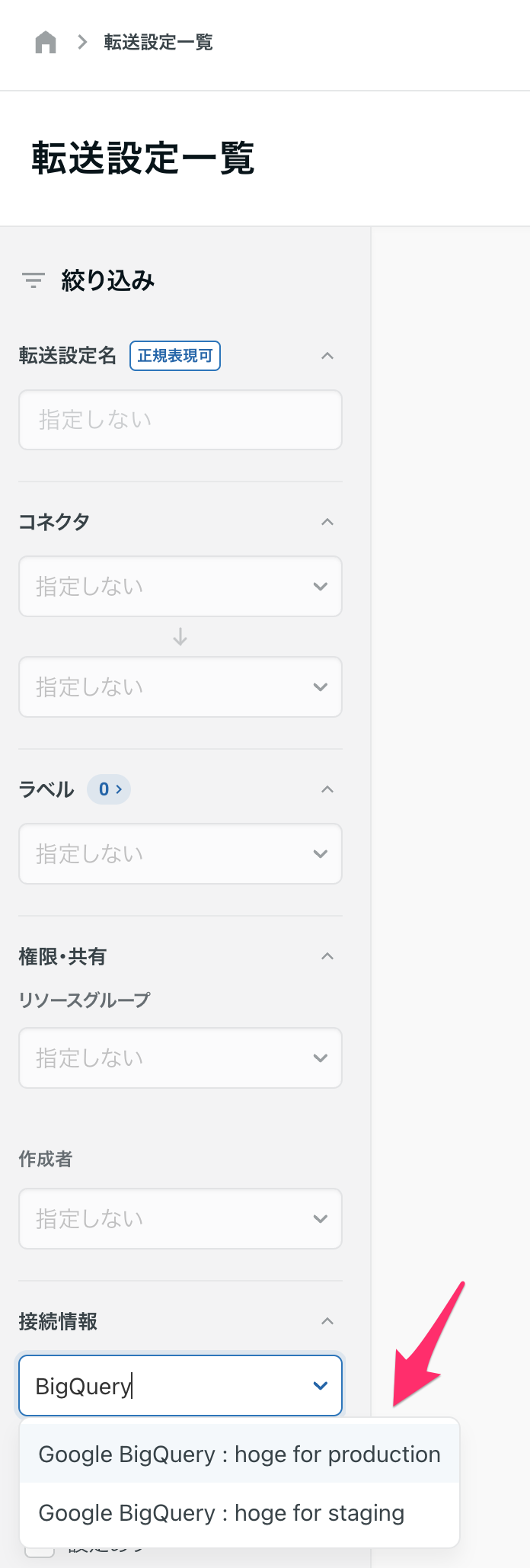

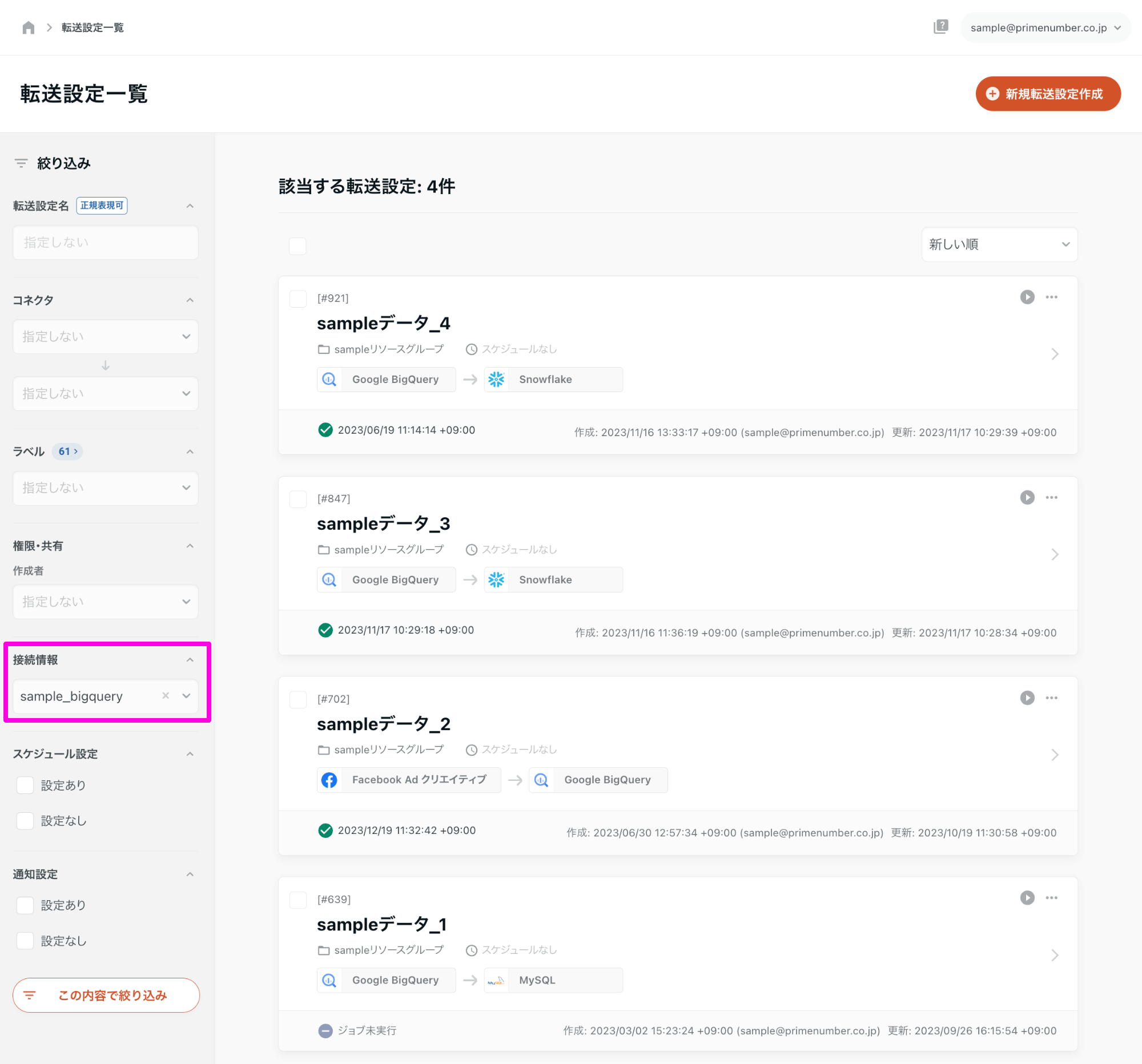

ETL Configuration list can now be filtered by Connection Configuration type.

In the last update, filtering by Connector was supported.

Building on that, this update adds filtering by individual Connection Configuration.

This makes it easy to see which Connection Configuration is used for any given ETL Configuration.

2024-07-22

UI・UX

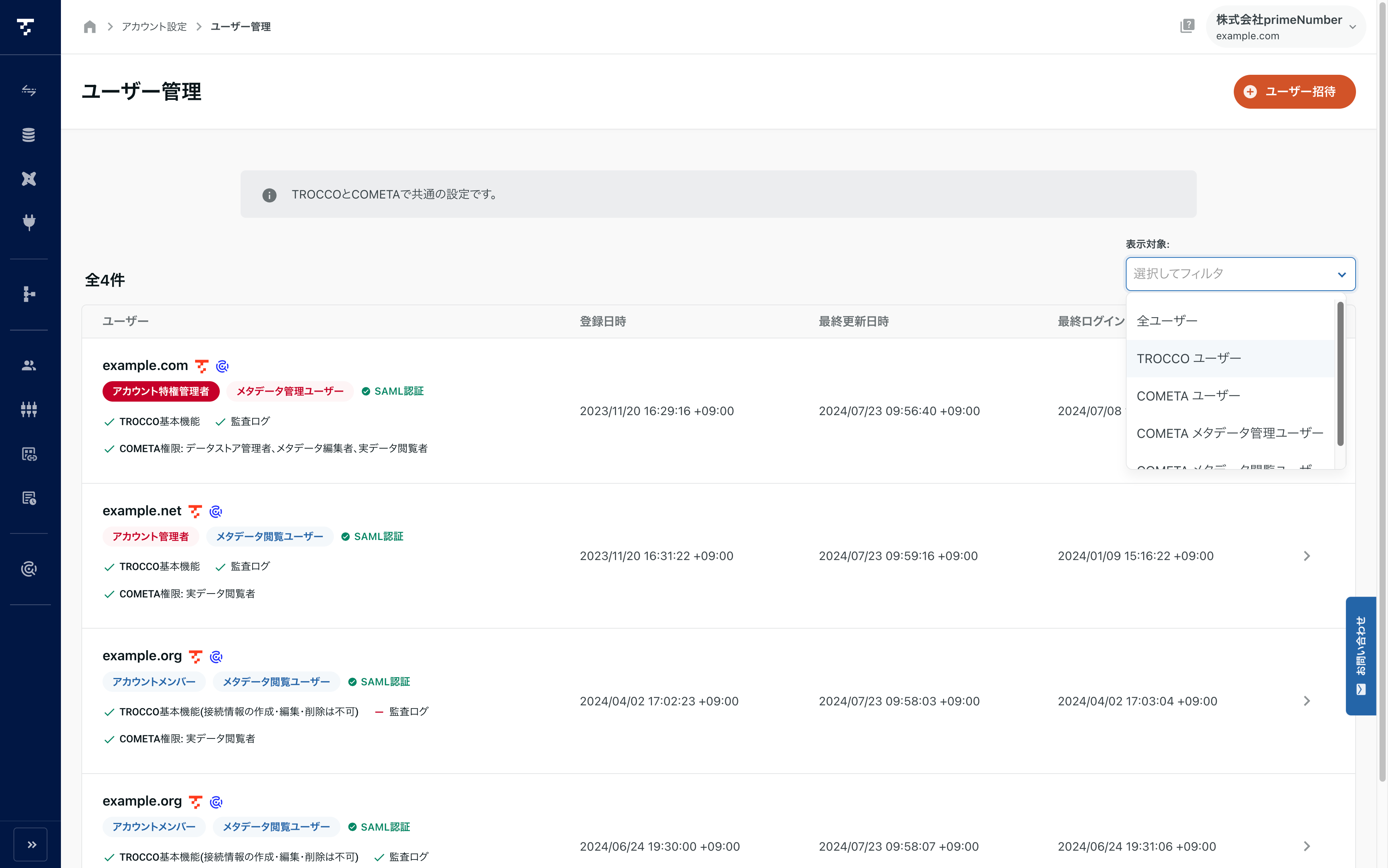

Changes to user management screens

The design of the user management screen has been changed.

This allows each user's permissions (operations allowed on TROCCO) to be checked at a glance.

When used in conjunction with COMETA, users can also be narrowed down.

2024-07-16

ETL Configuration

Data Source Databricks

New Data Source Databricks added.

For more information, see Data Source - Databricks.

Notice

Increased memory size for ETL Job

The release within the week of 07/16/2024 will increase the memory size used for data transfer.

- Memory size before change: 2 GiB

- Memory size after change: 6 GiB

This change applies to ETL Configurations created after the above release.

Because ETL Jobs will run with more memory, performance may improve compared to jobs before the change.

However, the following Connectors are exceptions: they already use a memory size of 15 GiB, and 15 GiB will continue to apply after this change.

List of Connectors with exceptional memory size of 15 GiB

Elimination of Direct and Aggregate transfer functions

The following transfer functions have been eliminated.

- Direct Transfer (selectable when the Data Source Amazon S3 -> Data Destination SFTP combination is used)

- Aggregate Transfer (selectable when the Data Source Google BigQuery -> Data Destination Amazon S3 combination is used)

ETL Configuration

When an OutOfMemoryError occurs, the execution log will clearly indicate this.

When an OutOfMemoryError occurs, it is now clearly indicated in the execution log.

If this message is displayed, please refer to the section on what to do when OutOfMemoryError occurs.

Input restrictions added for Data Source HTTP and HTTPS

ETL Configuration STEP2 > Input Option now enforces upper and lower limits on the values that can be entered for each setting item.

For more information, see Data Source - HTTP/HTTPS.

2024-07-01

Notice

Support for Google Analytics (Universal Analytics) discontinued

In response to Google's discontinuation of Universal Analytics, the following Connectors will be discontinued on July 01, 2024.

- Data Source - Google Analytics

- Data Destination - Google Analytics Measurement Protocol

After 07/01/2024, it will no longer be possible to create new ETL Configurations or Connection Configurations for these Connectors. In addition, running a Job from an existing ETL Configuration will result in an error.

Please consider switching to Google Analytics 4 and using Data Source - Google Analytics 4 and Google Analytics 4 Connection Configuration in the future.

2024-06-24

ETL Configuration

Data Destination Databricks

New Data Destination Databricks added.

For more information, see Data Destination - Databricks.

2024-06-17

Notice

Limit job execution by the maximum number of simultaneous executions

TROCCO limits the number of jobs that can run simultaneously within an account.

Following the rate plan change in 04/2024, data mart jobs are now also subject to this limitation.

For more information on this limitation, please refer to Job Concurrency Limit.

dbt linkage

Compatible with dbt versions 1.7 and 1.8

dbt Core v1.7 and dbt Core v1.8 can now be specified.

The dbt version can be selected from the dbt Git repository.

2024-06-10

Notice

Restrictions on Job Execution in the Free Plan

If you are using the Free plan, you can no longer run jobs when the cumulative monthly processing time exceeds the processing time quota.

The accumulated processing time returns to 0 hours at midnight (UTC+9) on the first day of the following month. If any jobs were not executed, they should be rerun in the following month.
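As a rough illustration of the reset timing described above, the sketch below computes the next reset instant from a given time. The function name `next_quota_reset` is hypothetical and is not part of TROCCO; the only facts taken from this notice are the UTC+9 offset and the first-of-month boundary.

```python
from datetime import datetime, timedelta, timezone

# UTC+9, the offset stated in the notice.
JST = timezone(timedelta(hours=9))


def next_quota_reset(now: datetime) -> datetime:
    """Return midnight (UTC+9) on the first day of the month after `now`.

    `now` should be timezone-aware; a naive datetime would be interpreted
    in the machine's local time zone by astimezone().
    """
    local = now.astimezone(JST)
    if local.month == 12:
        year, month = local.year + 1, 1
    else:
        year, month = local.year, local.month + 1
    return datetime(year, month, 1, tzinfo=JST)
```

For example, a job that hits the quota at 2024-06-30 23:00 (UTC+9) would become runnable again one hour later, while one that hits it at 2024-07-01 01:00 (UTC+9) would wait until 2024-08-01.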

ETL Configuration

Record ID can be specified as update key in update/upsert of Data Destination kintone.

Record IDs can now be specified as update keys.

If you wish to specify a record ID, enter $id as the update key.

2024-06-03

API Update

Data Destination Google Ads Conversions

Regarding extended conversions, the version of the Google Ads API used for transfer has been updated from v14.1 to v16.

Please refer to the Google Ads API documentation for information on the new version.

2024-05-27

Data Mart Configuration

Datamart Snowflake

The write setting for the output destination table in full refresh mode can now be selected from TRUNCATE INSERT and REPLACE.

- In the case of TRUNCATE INSERT, the schema of the existing table is not deleted.

- In the case of REPLACE, the schema of the existing table is deleted.

For more information on the difference between the two, see Data Mart - Snowflake.

API Update

Data Source Google Ads / Data Destination Google Ads Conversions

The version of Google Ads API used in the transfer was updated from v14.1 to v16.

Regarding Data Destination Google Ads Conversions, only offline conversions have been updated.

Extended conversion updates will be available next week.

Please refer to the Google Ads API documentation for information on the new version.

Data Source Yahoo! Search Ads and Data Source Yahoo! Display Ads (managed)

The version of YahooAdsAPI used for transfer has been updated from v11 to v12.

Please refer to the following documents for each new version.

- Yahoo! Search Ads API | Search Ads API v12 Release Notes

- YahooAdsAPI | Display Ads API v12 Release Notes

2024-05-20

Notice

API Update for Data Source Google Ads

On Wednesday, May 22, 2024, between 10:00 am and 4:00 pm, there will be an update to the Google Ads API used by Data Source Google Ads.

The API update includes some breaking changes.

Please see 2024/05/16 Destructive Changes to Data Source Google Ads for more information on the resources/fields that will be removed or changed and what to do about it.

2024-05-13

Notice

On May 9, 2024, we announced our brand renewal.

With the rebranding, the logotype of the product was changed from trocco to TROCCO, and the color scheme of the logo was changed as well.

The new logo image files and logo guidelines are available on our website.

Also, please refer to the press release regarding the brand renewal.

Connection Configuration

Allow SSH private key passphrase to be entered in Microsoft SQL Server Connection Configuration.

SSH private key passphrase has been added as a configuration item.

This allows you to connect to Microsoft SQL Server using a passphrase-protected private key.

2024-04-30

Security

TROCCO API is now restricted by IP address when executed.

Execution of the TROCCO API is now subject to IP address restrictions.

This allows for more secure use of the TROCCO API.

If you are already using the TROCCO API and have set at least one IP address under Account Security > Allowed IP Addresses, you must add the IP address used to run the TROCCO API to the Allowed IP Addresses.
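The allow-list behavior described above can be pictured with the following minimal sketch. It is not TROCCO's implementation: the function name `is_allowed` is hypothetical, the support for CIDR ranges is an assumption, and treating an empty list as "no restriction" is inferred from the wording of the notice rather than confirmed.

```python
import ipaddress


def is_allowed(client_ip: str, allowed: list[str]) -> bool:
    """Return True if client_ip matches any allowed address or CIDR range.

    Assumption: an empty allow-list means no restriction is applied.
    """
    if not allowed:
        return True
    addr = ipaddress.ip_address(client_ip)
    # strict=False lets a single host such as "192.0.2.1" be parsed as a
    # one-address network, so plain addresses and CIDR ranges both work.
    return any(addr in ipaddress.ip_network(entry, strict=False) for entry in allowed)
```

Under these assumptions, an account whose list contains only an office range would reject API calls from a CI server until that server's address is added.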

Data Mart Configuration

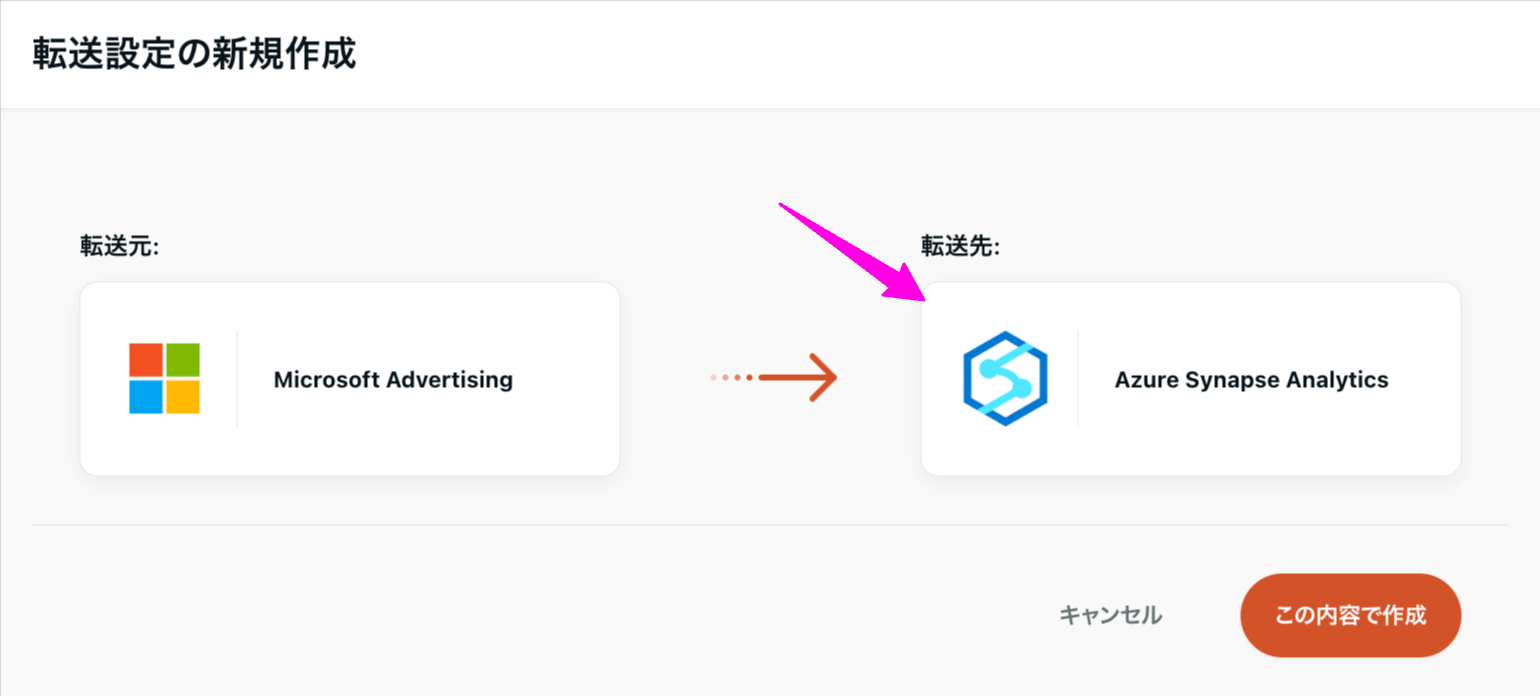

Data Mart Azure Synapse Analytics

New data mart Azure Synapse Analytics has been added.

For more information, see Data Mart - Azure Synapse Analytics.

ETL Configuration

Data Source Google Sheets columns can be extracted.

Previously, it was necessary to manually enter the name and data type of the column to be retrieved in ETL Configuration STEP 1.

With this update, column information can be extracted from the spreadsheet to be transferred.

After entering the various setting items, click Extract Column Information to automatically set the column names and data types.

Along with this functionality, an entry field has been added to specify the starting column number for retrieval.

For more information, see Data Source - Google Spreadsheets.

2024-04-22

ETL Configuration

Data Source Yahoo! Search Ads and Data Source Yahoo! Display Ads (managed)

Due to the discontinuation of YahooAdsAPI v11, a Base Account ID entry field has been added to the configuration of the following Data Source Connectors in preparation for the YahooAdsAPI version update.

- Data Source Yahoo! Search Ads

- Data Source Yahoo! Display Ads (managed)

For details, see "MCC Multi-Tiered" in v12 Upgrade Information.

The transition to v12 is scheduled for mid-May 2024.

Once the migration to v12 is complete, ETL Configurations in which the Base Account ID has not been entered will result in an error.

Please edit your existing ETL Configuration prior to v12 migration.

2024-04-15

ETL Configuration

Data Destination kintone can now transfer to tables

Data can now be transferred to tables (formerly subtables) in the kintone application.

For details on how to transfer, please refer to Updating Tables (formerly Subtables) in the Data Destination kintone application.

Data Source Google BigQuery allows users to select "Bucket Only" for temporary data export specification.

When transferring data from Data Source Google BigQuery, data is temporarily output to Google Cloud Storage.

You can now specify only a bucket as the output destination for this temporary data.

With the existing format of entering a Google Cloud Storage URL, temporary data is written to the same path on every run unless Custom Variables are used.

As a result, data on Google Cloud Storage could be overwritten.

On the other hand, if only a bucket is specified, an internally unique path is created and temporary data is output to that path.

This avoids the aforementioned situation where data in Google Cloud Storage is overwritten and deleted.
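The idea of a unique path per export can be sketched as follows. This only illustrates the general technique; the function name `unique_temp_prefix` and the `tmp/<random>/` layout are hypothetical and do not describe the path TROCCO actually generates.

```python
import uuid


def unique_temp_prefix(bucket: str) -> str:
    """Build a gs:// prefix that differs on every call.

    The "tmp/" segment and the use of a random UUID are illustrative
    assumptions, not TROCCO's actual naming scheme.
    """
    return f"gs://{bucket}/tmp/{uuid.uuid4().hex}/"
```

Because every call yields a different prefix, two runs that export to the same bucket do not write to the same objects.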

2024-04-08

UI・UX

Allow organization name to be set in TROCCO account

You can now set an organization name for your TROCCO account.

The organization name makes it easier to identify which TROCCO account you are logging into if you are managing multiple TROCCO accounts, for example.

For more information, see About Organization Names.

Managed ETL

Add Amazon Redshift as a Data Destination

Amazon Redshift can now be selected as a Managed ETL Data Destination.

ETL Configurations that import Data Source tables in bulk and transfer them to Amazon Redshift can be created and managed centrally.

API Update

Data Source Google Ad Manager

The version of the Google Ad Manager API used during transfer has been updated from v202305 to v202311.

For more information on the new version, see Google Ad Manager API.

2024-04-01

Notice

Effective April 1, 2024, the rate plan will be revised.

For details, please refer to the fee plan.

ETL Configuration

Data Source Shopify supports retrieval of collections.

The collection object can now be selected as a target for Data Source Shopify.

See Data Source - Shopify for more information, including each item to be retrieved.

Additional types can be specified in Data Destination Amazon Redshift

The following items have been added to Data Type under STEP2 Output Option > Column Setting in ETL Configuration for Data Destination Amazon Redshift.

- TIME

- DATE

Added a setting for how NULL values are handled when updating records in Data Destination kintone

When the update data for an existing record in kintone contains a null value, you can now select the update process for that record.

You can choose to update with NULL or skip updating in the advanced settings of ETL Configuration STEP 1.
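The difference between the two options can be pictured with the small sketch below. It is a simplified model, not kintone's or TROCCO's API: the function `apply_update` and the field names are hypothetical, and applying the skip per field is an assumption about how the option behaves.

```python
from typing import Any, Optional


def apply_update(
    existing: dict[str, Any],
    incoming: dict[str, Optional[Any]],
    skip_nulls: bool,
) -> dict[str, Any]:
    """Merge incoming values into an existing record.

    With skip_nulls=True, fields whose incoming value is None keep their
    existing value; with skip_nulls=False they are overwritten with None.
    """
    merged = dict(existing)
    for field, value in incoming.items():
        if value is None and skip_nulls:
            continue
        merged[field] = value
    return merged
```

With an existing record `{"name": "Alice", "phone": "555-0100"}` and incoming data `{"name": "Alicia", "phone": None}`, skipping keeps the phone number while updating with NULL clears it.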

2024-03-25

ETL Configuration

Data Destination Azure Synapse Analytics

New Data Destination Azure Synapse Analytics added.

For more information, see Data Destination - Azure Synapse Analytics.

2024-03-18

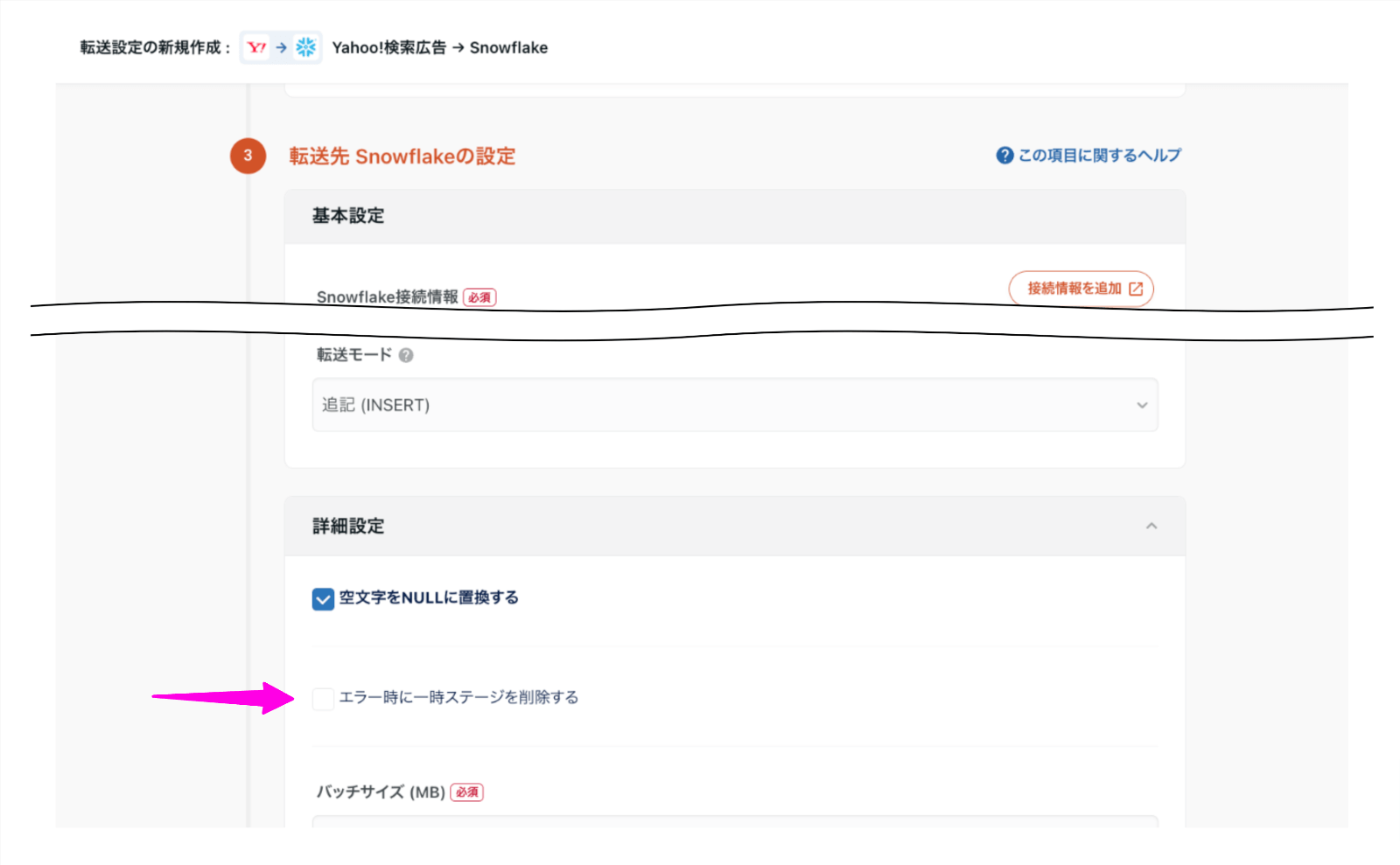

ETL Configuration and Managed ETL

Temporary stage can be deleted when ETL Job to Snowflake fails.

If an ETL Job to Snowflake fails, you can now choose to delete the temporary stage.

For more information, see Data Destination - Snowflake > STEP1 Advanced Configuration.

UI・UX

Maximum number of ETL Configuration Data Setting data previews changed to 20.

The maximum number of data items displayed in the Data Preview of ETL Configuration STEP2 and ETL Configuration Details has been changed to 20.

This change shortens the time it takes for the schema data preview to appear in ETL Configuration STEP 2.

API Update

Data Destination Facebook Custom Audience (Beta Feature)

The version of the Facebook API used for transfer has been updated from v17 to v18.

Please refer to the Meta for Developers documentation for the new version.

2024-03-11

Workflow

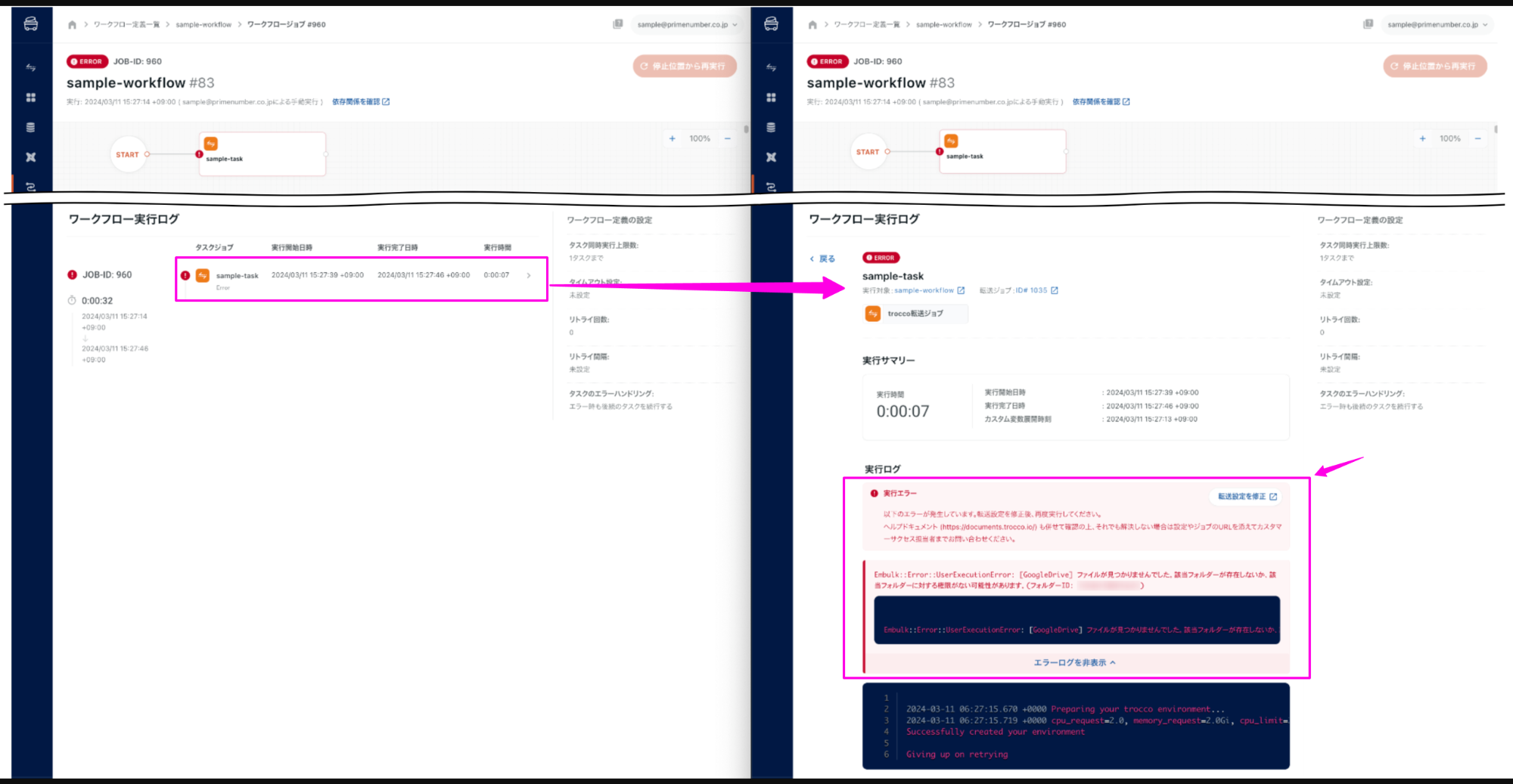

Allow the error log of an ETL Job to be viewed within the execution log of a Workflow Job.

When an ETL Job embedded in a workflow fails, the error log for the relevant ETL Job can now be viewed from the workflow execution log.

You can check the error log by clicking the corresponding task in the workflow execution log.

API Update

The Facebook API used for the following Connector has been updated from v17 to v18.

- Data Source Facebook Ad Insights

- Data Source Facebook Ad Creative

- Data Source Facebook Lead Ads

- Data Destination Facebook Conversions API

Please refer to the Meta for Developers documentation for the new version.

Audit Log

Removed "Restore Past Revisions" action in ETL Configuration from audit log capture.

Removed "Update ETL Configuration (restore past revisions of change history)" from actions eligible for audit logging.

For more information, please refer to the Change History of the Audit Log function.

2024-03-04

Notice

About TROCCO Web Activity Log Help Documentation

Until now, the help documentation for TROCCO Web Activity Log has been available on the Confluence space.

The help documentation has now been transferred to the TROCCO Help Center. Please refer to the TROCCO Web Activity Log in the future.

The help documentation on the Confluence side will be closed soon.

UI・UX

ETL Configuration list can now be filtered by Connection Configuration.

Connection Configuration has been added as a filter item in the ETL Configuration list.

You can filter the list down to the ETL Configurations that use the specified Connection Configuration.

For more information, see List of ETL Configurations > Filter.

ETL Configuration

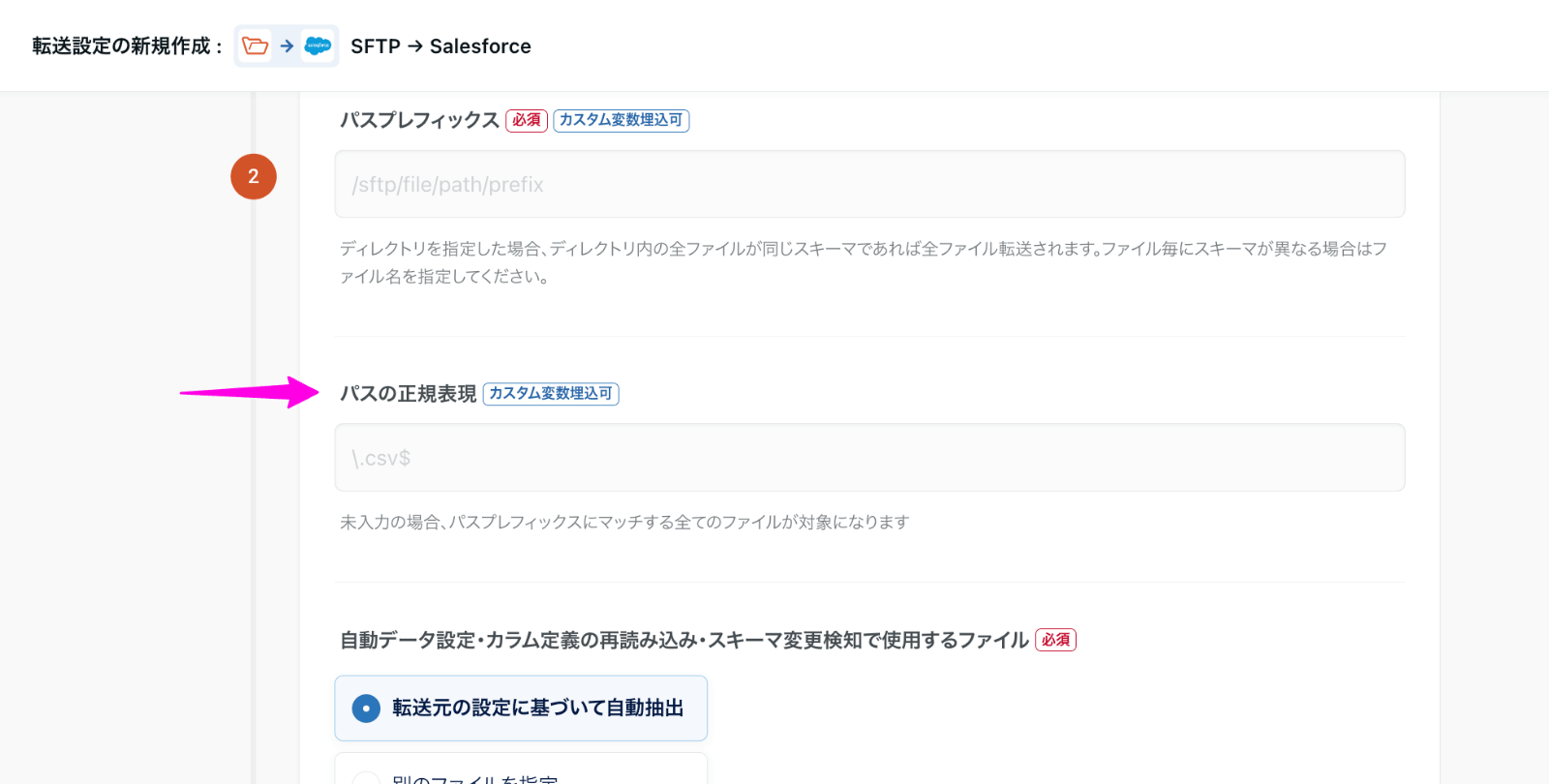

Allow regular expressions to be used to specify the path to the file to be retrieved by Data Source SFTP.

In ETL Configuration STEP1, the path to the file to be retrieved can now be specified with a regular expression.

For example, if you enter .csv$ in the path regular expression, only csv files under the directory specified by the path prefix will be retrieved.

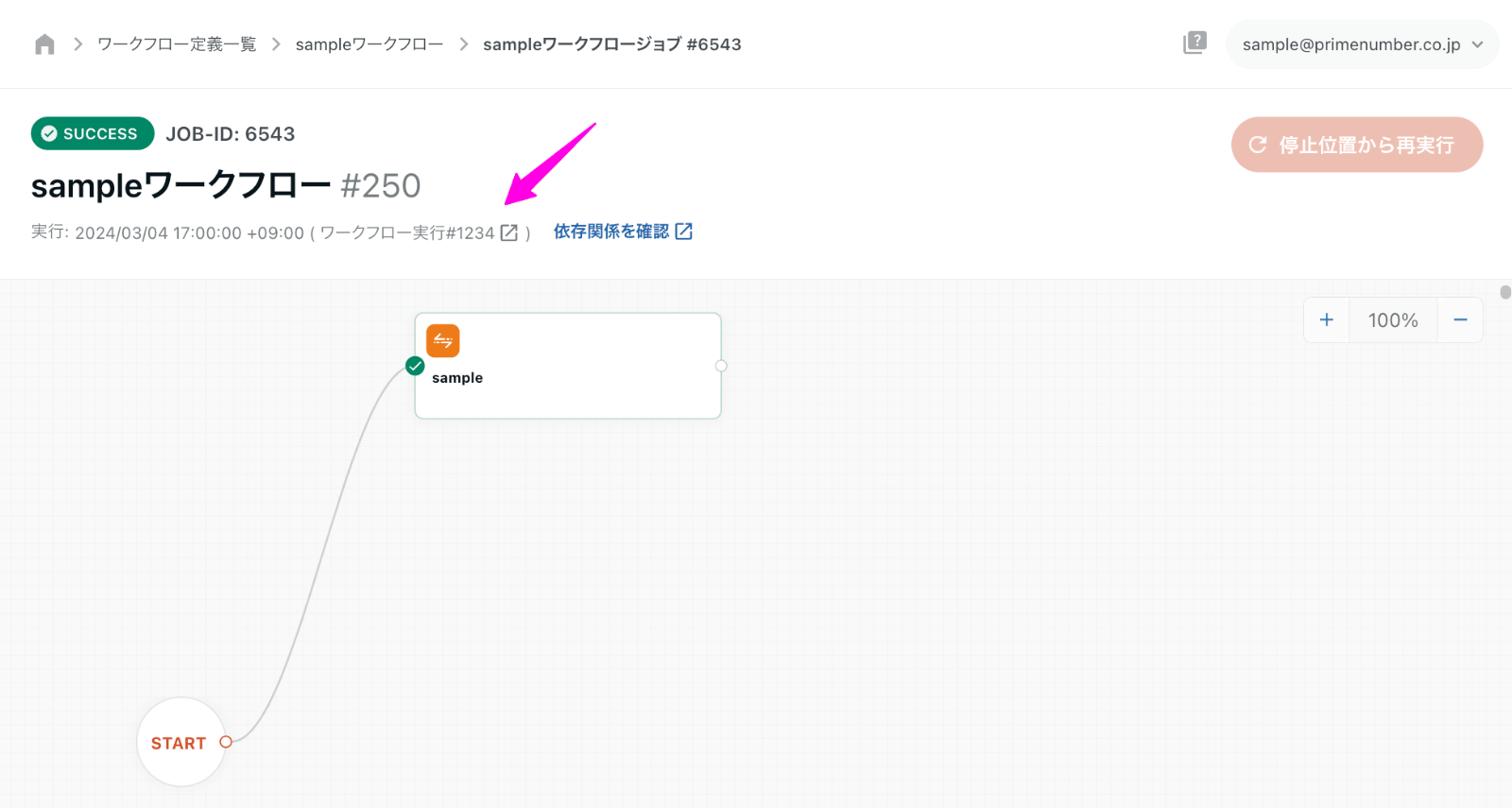
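To see how a pattern such as .csv$ behaves, the sketch below applies it to a few made-up paths using Python's `re` module. Two assumptions apply: the paths are hypothetical, and the pattern is tested with partial-match (search) semantics; the regular expression engine TROCCO uses may differ from Python's in details.

```python
import re

# Hypothetical file paths under a path prefix; not taken from TROCCO.
paths = [
    "/data/export/sales.csv",
    "/data/export/sales.csv.bak",
    "/data/export/readme.txt",
    "/data/export/notes_csv",
]


def filter_paths(pattern: str, candidates: list[str]) -> list[str]:
    """Return the candidates in which the pattern is found anywhere."""
    regex = re.compile(pattern)
    return [p for p in candidates if regex.search(p)]


# An unescaped "." matches any character, so "notes_csv" also matches.
loose = filter_paths(r".csv$", paths)

# Escaping the dot restricts the match to a literal ".csv" suffix.
strict = filter_paths(r"\.csv$", paths)
```

Here `loose` contains both `sales.csv` and `notes_csv`, while `strict` contains only `sales.csv`, so escaping the dot is the safer choice when only files with a .csv extension are wanted.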

Workflow

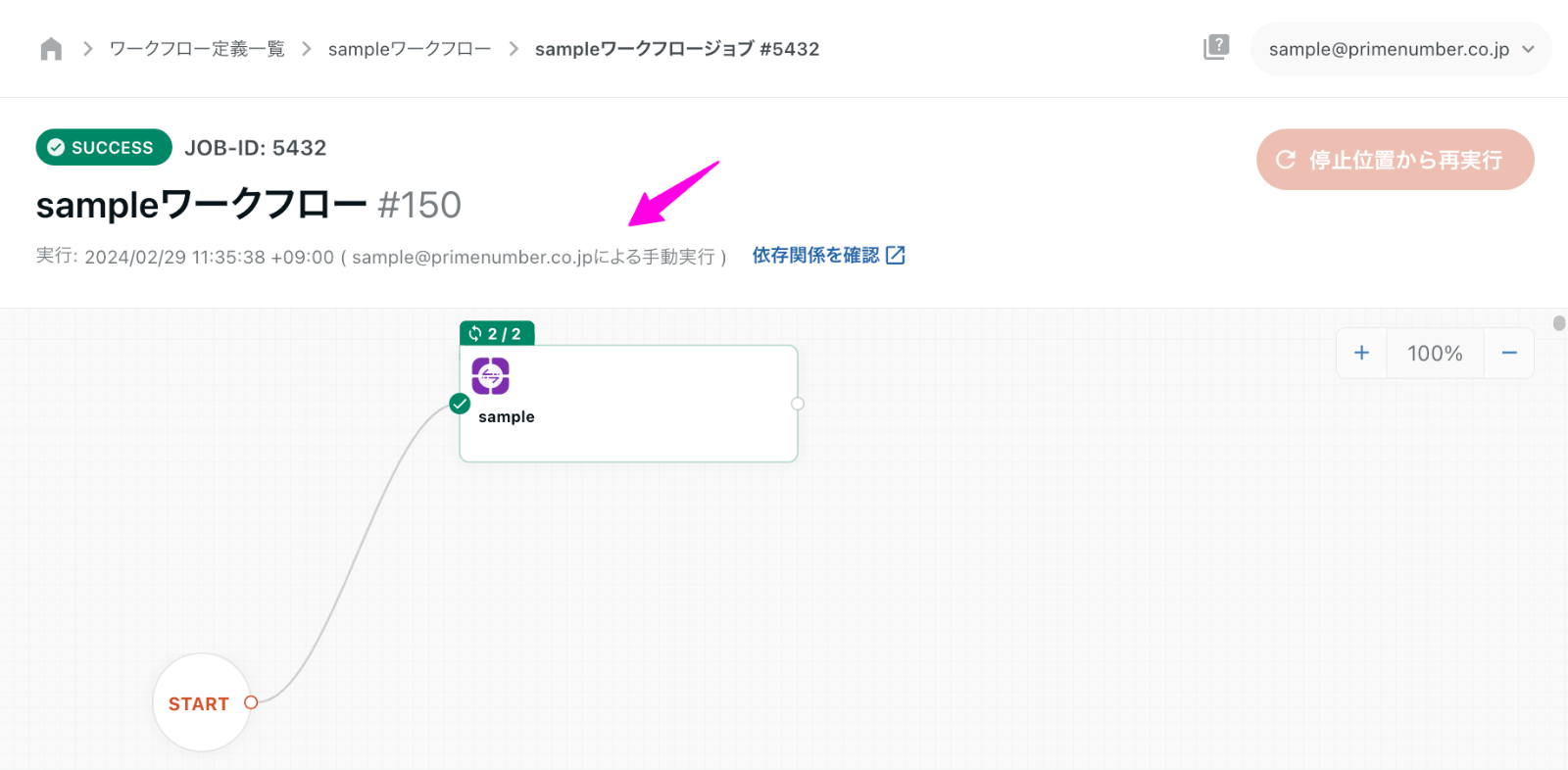

Manually executed Workflow Jobs now show the user who executed them

Previously, when a Workflow Job was manually executed, it was not indicated which user executed it.

With this change, the email address of the executing user is now displayed in the following cases.

- When executed by clicking the Execute button on the Workflow definition details screen

- When executed by clicking Re-Execute from the stop position on the Workflow Job Details screen

A link to the Workflow Job that triggered the run is now displayed

Previously, Workflow Jobs that were executed as tasks of another Workflow Job did not indicate by which Workflow Job they were executed.

With this change, a link to the Workflow Job from which it was run is now displayed.

2024-02-26

ETL Configuration

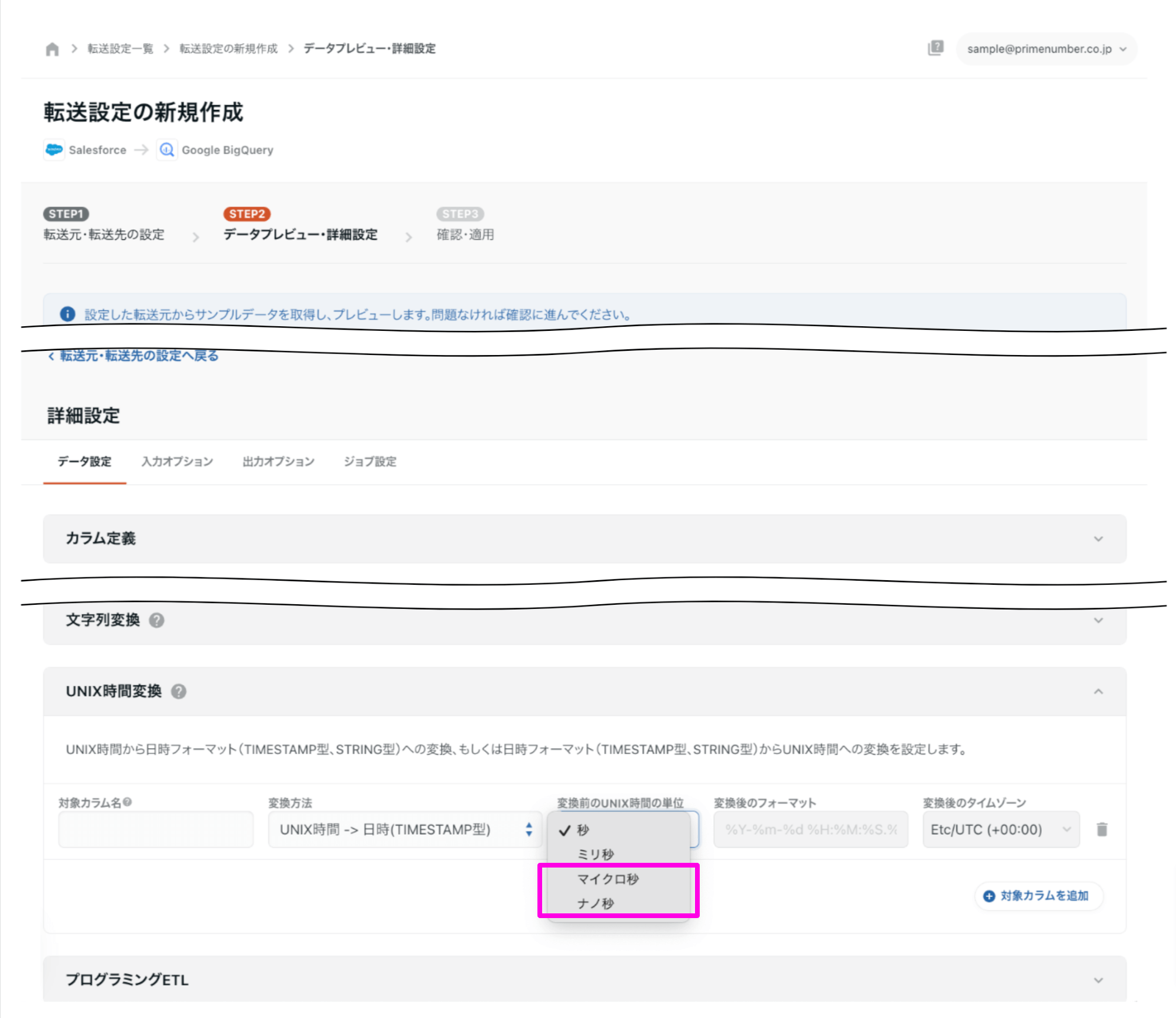

Microseconds and nanoseconds added to time units for UNIX Time conversion.

The number of UNIX Time units that can be handled in UNIX Time conversion in ETL Configuration STEP 2 has been expanded.

Microseconds and nanoseconds can now be selected as the unit of UNIX Time both before and after conversion.

For more information, see UNIX Time conversion.
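The relationship between the units can be sketched as follows. This is an illustration in Python, not TROCCO's conversion logic; the function names and the `UNITS_PER_SECOND` table are hypothetical, and conversions to a coarser unit round down.

```python
from datetime import datetime, timezone

# How many of each unit make up one second.
UNITS_PER_SECOND = {
    "seconds": 1,
    "milliseconds": 10**3,
    "microseconds": 10**6,
    "nanoseconds": 10**9,
}


def convert_unit(value: int, src: str, dst: str) -> int:
    """Convert an integer UNIX Time between units, rounding down."""
    return value * UNITS_PER_SECOND[dst] // UNITS_PER_SECOND[src]


def unix_to_datetime(value: int, unit: str) -> datetime:
    """Convert an integer UNIX Time in the given unit to a UTC datetime.

    Python datetimes have microsecond resolution, so digits finer than
    a microsecond are dropped.
    """
    per_second = UNITS_PER_SECOND[unit]
    seconds, remainder = divmod(value, per_second)
    microseconds = remainder * 10**6 // per_second
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return moment.replace(microsecond=microseconds)
```

For instance, `1708905600123456` interpreted as microseconds corresponds to 2024-02-26 00:00:00.123456 UTC, whereas the same digits interpreted as milliseconds would land tens of thousands of years in the future, which is why selecting the correct unit matters.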

2024-02-19

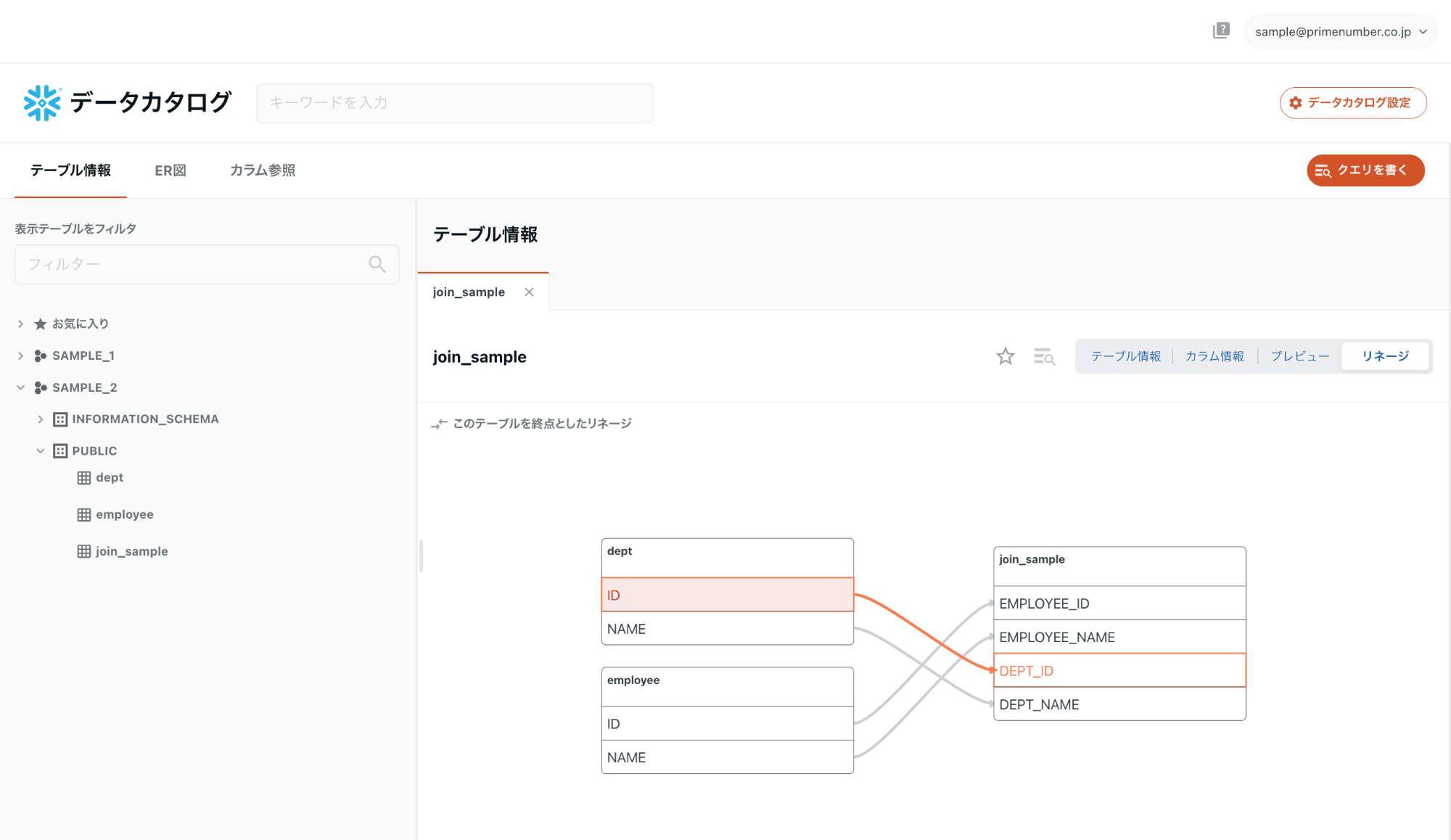

Data Catalog

Snowflake version of Data Catalog supports "Automatic Metadata Inheritance" and "Column Lineage".

Until now, Automatic Metadata Inheritance and Column Lineage for Data Catalog were supported only in the Google BigQuery version.

With this change, the same functionality is now available in the Snowflake version of the Data Catalog.

ETL Configuration

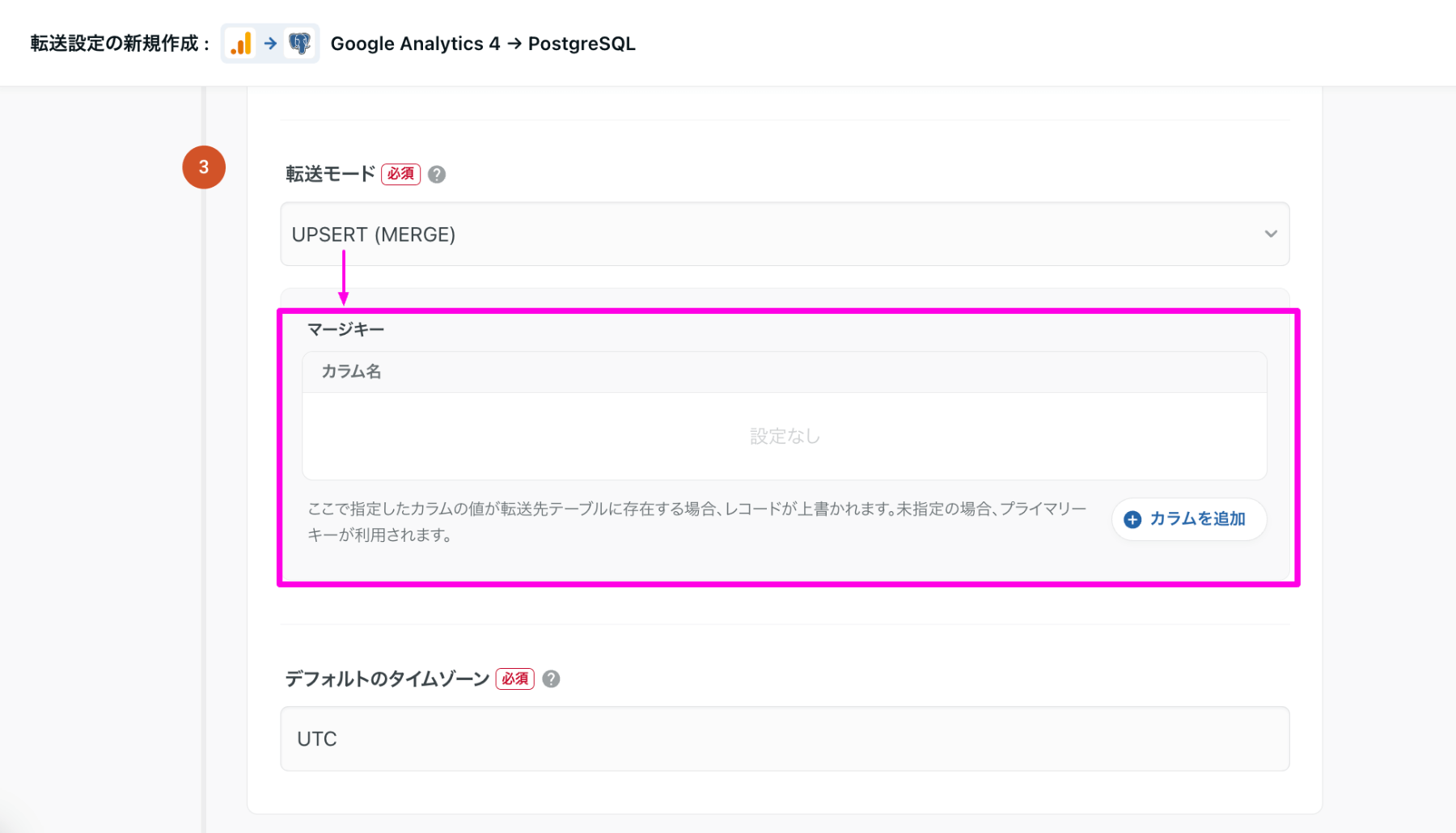

Changed how to set merge key for Data Destination PostgreSQL.

The method of setting the merge key when the transfer mode is set to UPSERT (MERGE) in ETL Configuration STEP1 has been changed.

Previously, a merge key had to be set in ETL Configuration STEP 2.

With this change, when UPSERT (MERGE) is selected as the transfer mode in ETL Configuration STEP 1, the Merge Key setting item will appear directly below.

API Update

Data Source Shopify

The version of the Shopify API used for transfers has been updated from 2023-01 to 2024-01.

Please refer to the documentation in the Shopify API reference docs for the new version.

2024-02-13

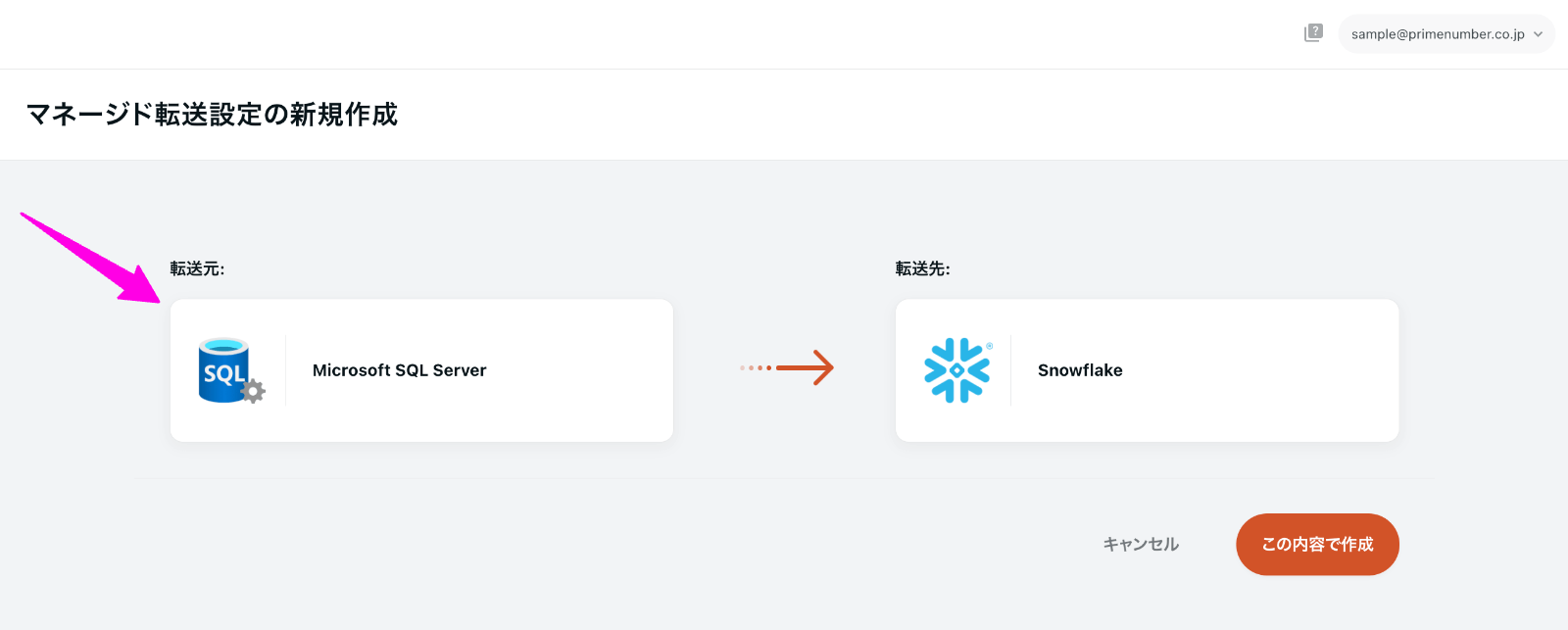

Managed ETL

Add Microsoft SQL Server as Data Source

Microsoft SQL Server can now be selected as the Data Source for Managed ETL.

Microsoft SQL Server tables can be imported in bulk, and the associated ETL Configuration can be created centrally.

See Managed ETL Configuration > Data Source Microsoft SQL Server for various entry fields.

ETL Configuration

Expanded columns of master data for ads that can be retrieved from Data Source LINE Ads.

small_delivery has been added to the columns retrieved as master data for ads.

Master data for ads can be retrieved when Master Data (Advertisements) is selected for Download Type and Advertisements is selected for Master Data Type in STEP 1 of ETL Configuration.

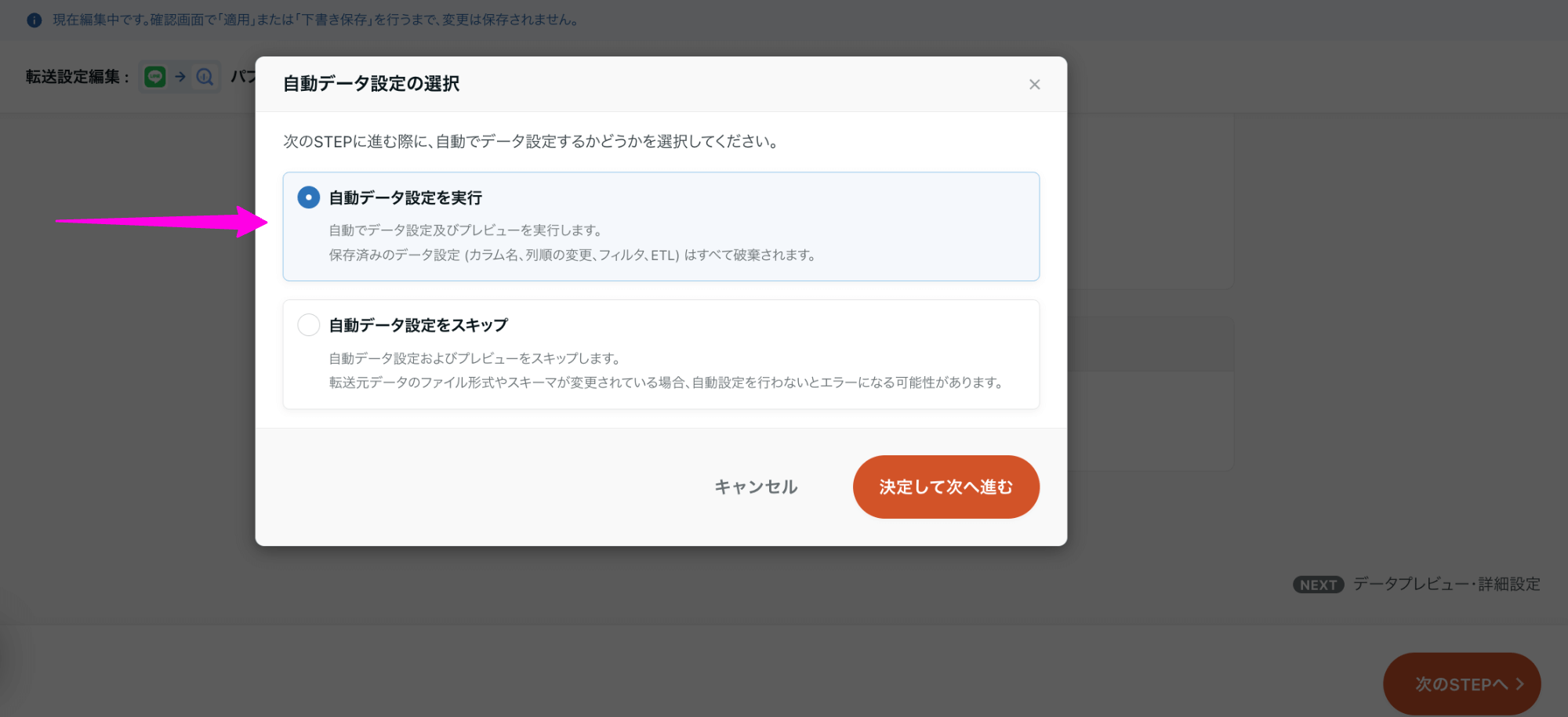

Note that to incorporate the small_delivery column in an existing ETL Configuration, you must edit the ETL Configuration and run the Automatic Data Setting.

Select "Execute Automatic Data Setting" on the screen that appears when moving from STEP1 to STEP2 of the Edit ETL Configuration screen, and save it.

2024-02-05

ETL Configuration

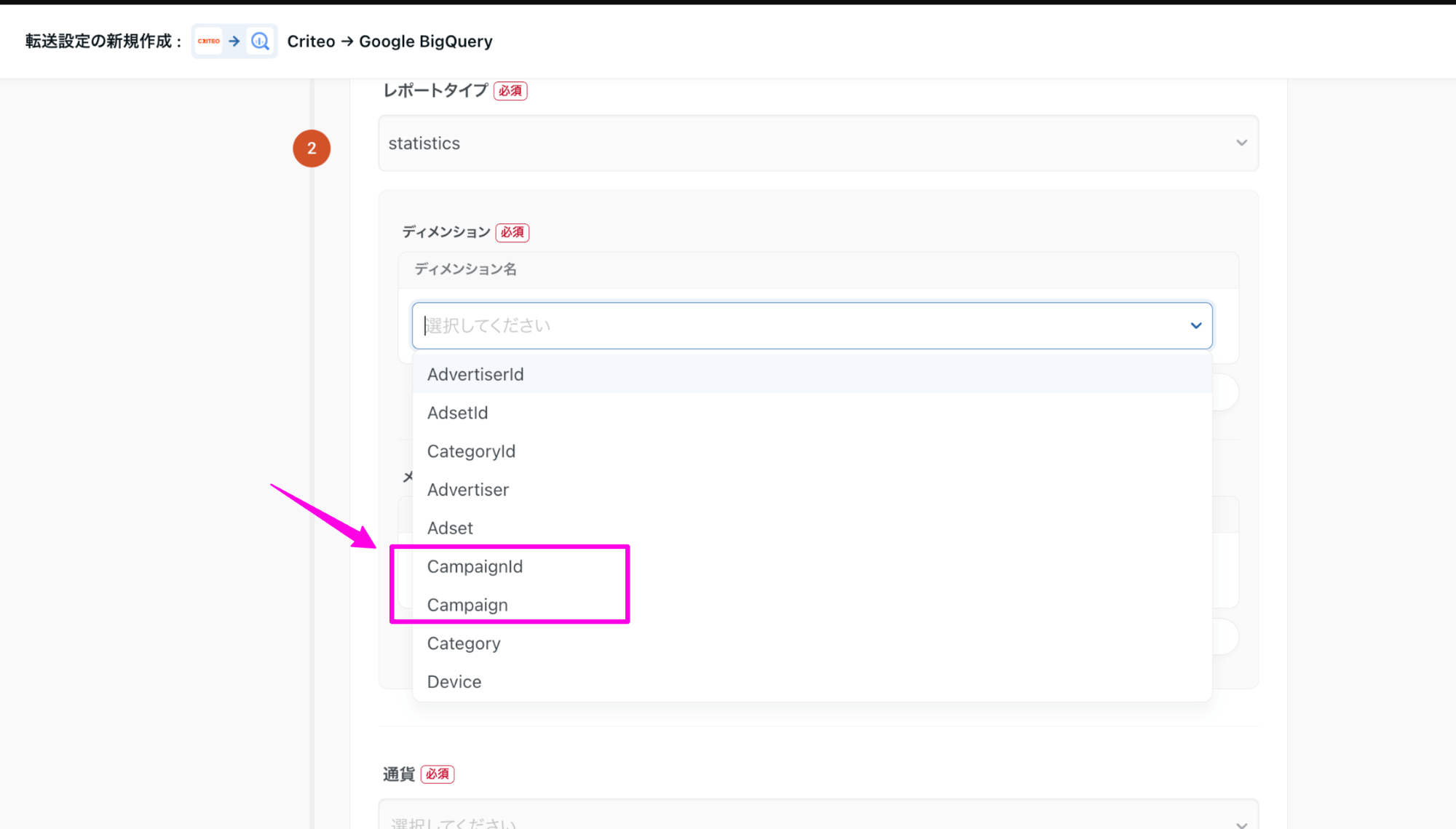

Expanded the types of dimensions that can be specified in Data Source Criteo

CampaignId and Campaign can now be selected in Dimension Name in ETL Configuration STEP 1.

Dimension name is an item that appears when statistics is selected as the report type.

API Update

Data Source Yahoo! Search Ads and Data Source Yahoo! Display Ads (managed)

The version of YahooAdsAPI used for transfer has been updated from v10 to v11.

Please refer to the YahooAdsAPI | Developer Center documentation for information on the new version.

Due to the API update, the old metrics have been discontinued.

From now on, if a column whose name contains "(old)" is specified, the corresponding new column is retrieved automatically.

2024-01-29

ETL Configuration

Data Destination Snowflake supports schema tracking.

Data Destination Snowflake now supports schema tracking.

Schema Tracking is a function that automatically corrects the schema of the Incremental Data Transfer table and resolves the schema difference between the Data Destination and the Connector's table.

From now on, it is no longer necessary to manually modify the schema on the Snowflake side when such a schema difference occurs.

UI・UX

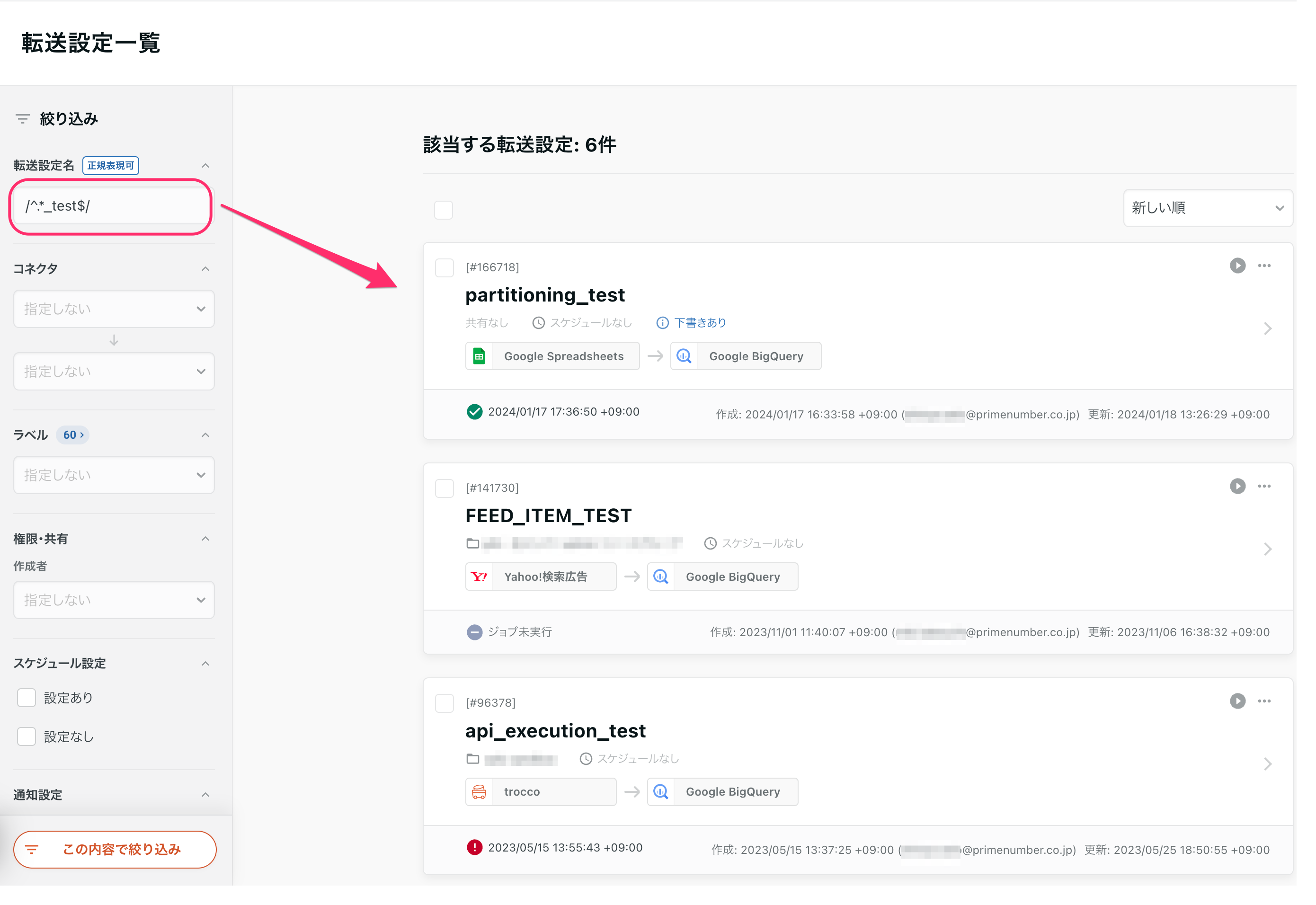

ETL Configuration list can be filtered by regular expression.

The ETL Configuration list can now be narrowed down by regular expression.

See Filtering ETL Configuration Names with Regular Expressions for more information on the notation of regular expressions that can be entered.

Time Zone Configuration values are now applied by default when creating Managed ETL Configuration.

The time zone specified in Time Zone Configuration is now selected by default in the time zone field in STEP 1 when creating a Managed ETL Configuration.

API Update

Data Destination Google Ads Conversions

The version of Google Ads API used during transfer has been updated from v13.1 to v14.1.

Both offline and extended conversions have been updated.

Please refer to the Google Ads API documentation for information on the new version.

2024-01-22

Managed ETL

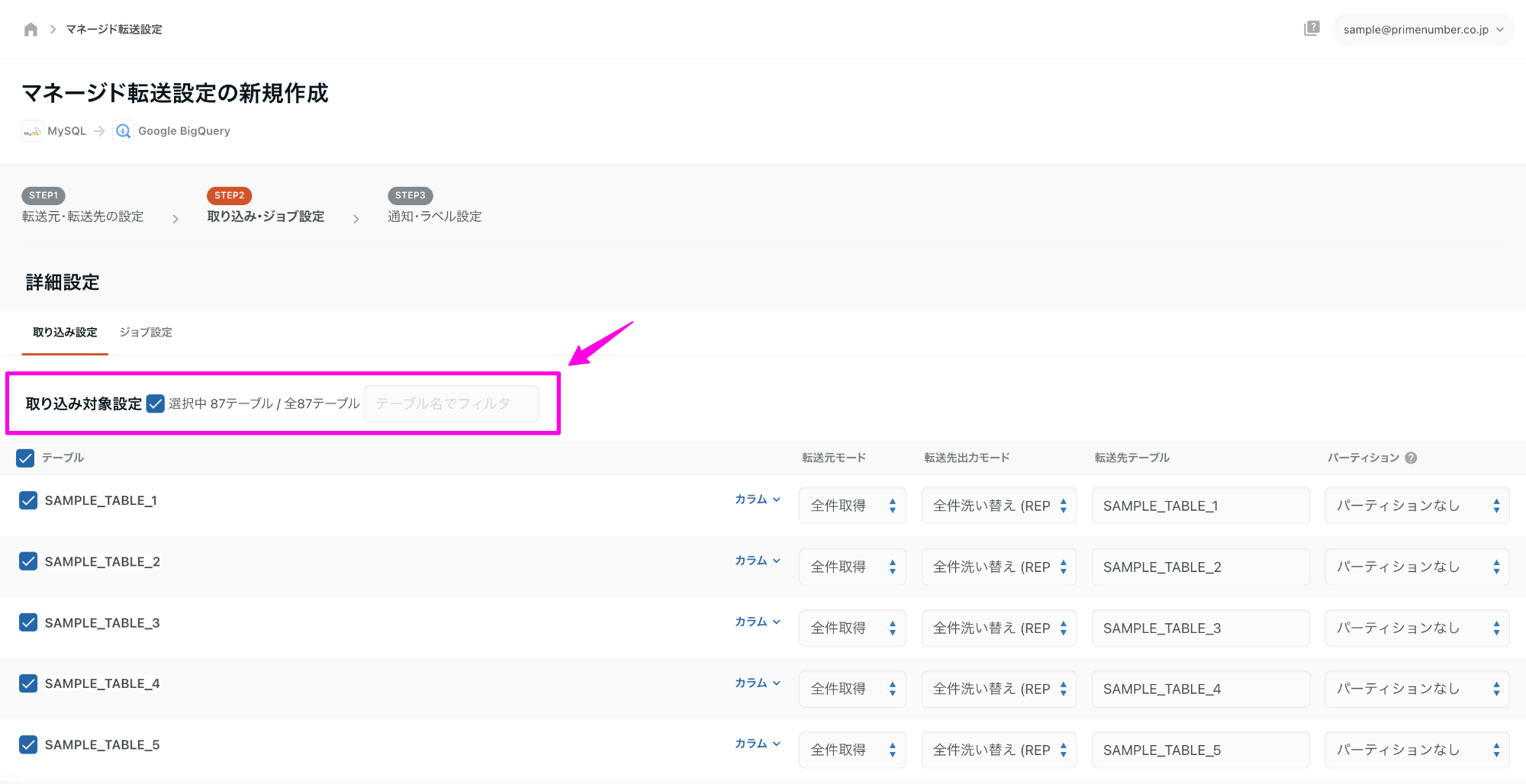

Enabled bulk selection and deselection of tables and filtering of table names

Previously, tables could only be selected one page at a time (up to 100 tables per page).

This change allows bulk selection and bulk deselection regardless of pagination.

In addition, it is now possible to filter by table name.

This change will be applied to the following screens.

- New creation STEP2

- List of unadded tables

- Check Created/Dropped Tables

ETL Configuration

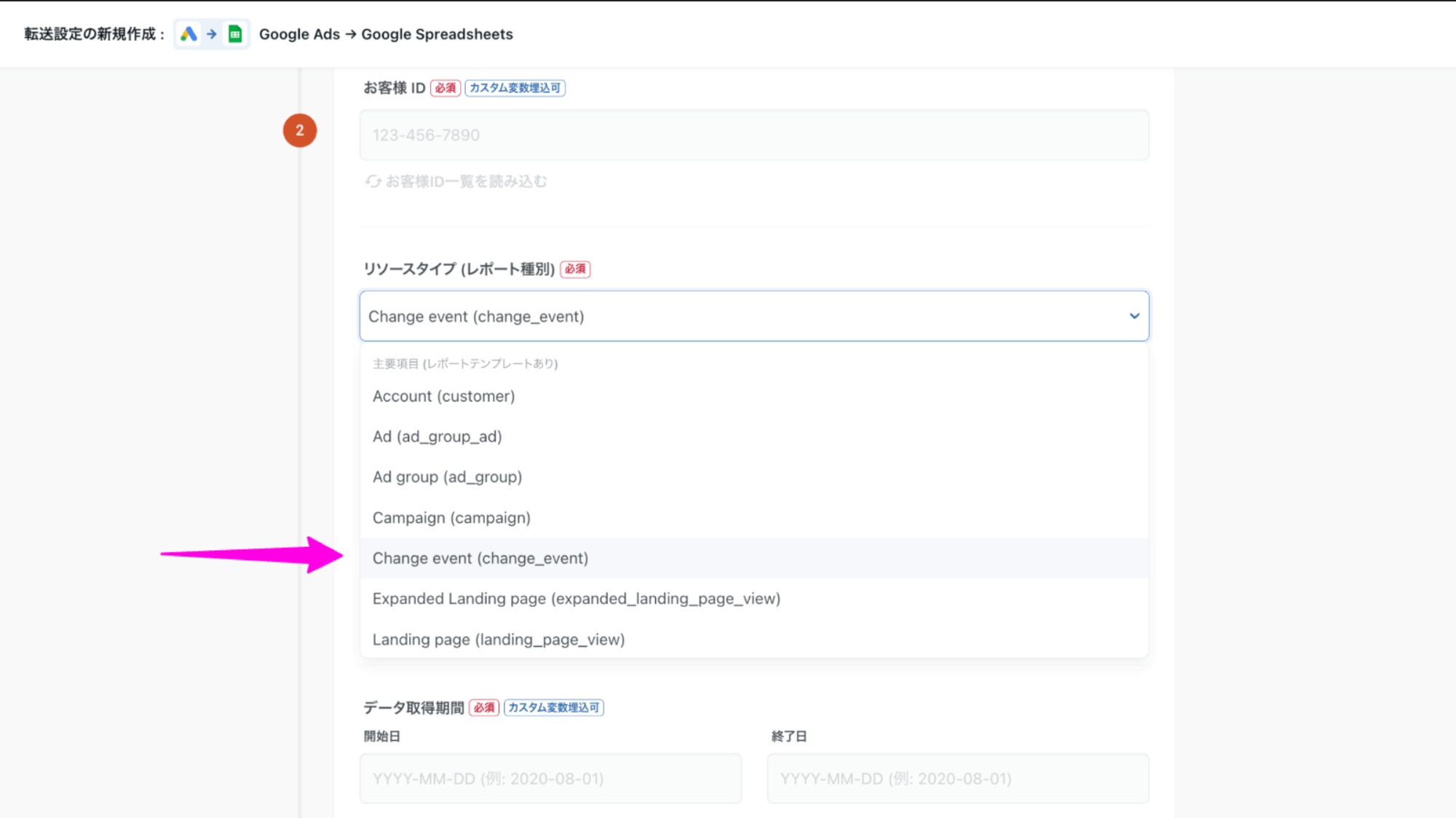

Added "Change event" to the resource type of Data Source Google Ads.

Change event (change_event) has been added to "Resource Type (Report Type)" in STEP 1 of ETL Configuration.

You can now get a report of changes that have occurred in your account.

For more information on change_event, please refer to the Google Ads API documentation.

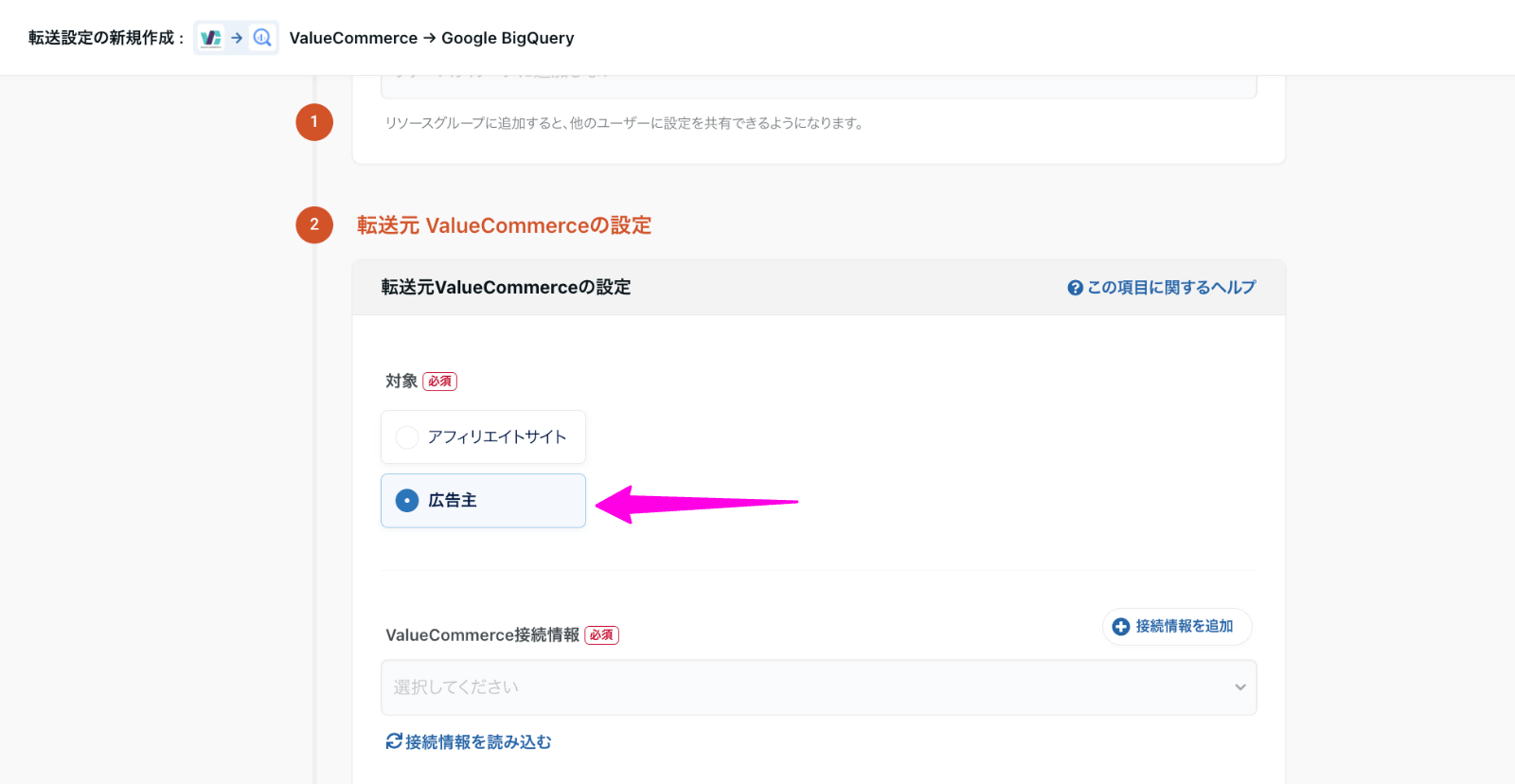

Data Source ValueCommerce can now retrieve reports for advertisers.

Previously, only affiliate sites were eligible to obtain reports.

With this change, advertiser reports can also be retrieved.

For more information, see Data Source - ValueCommerce.

UI・UX

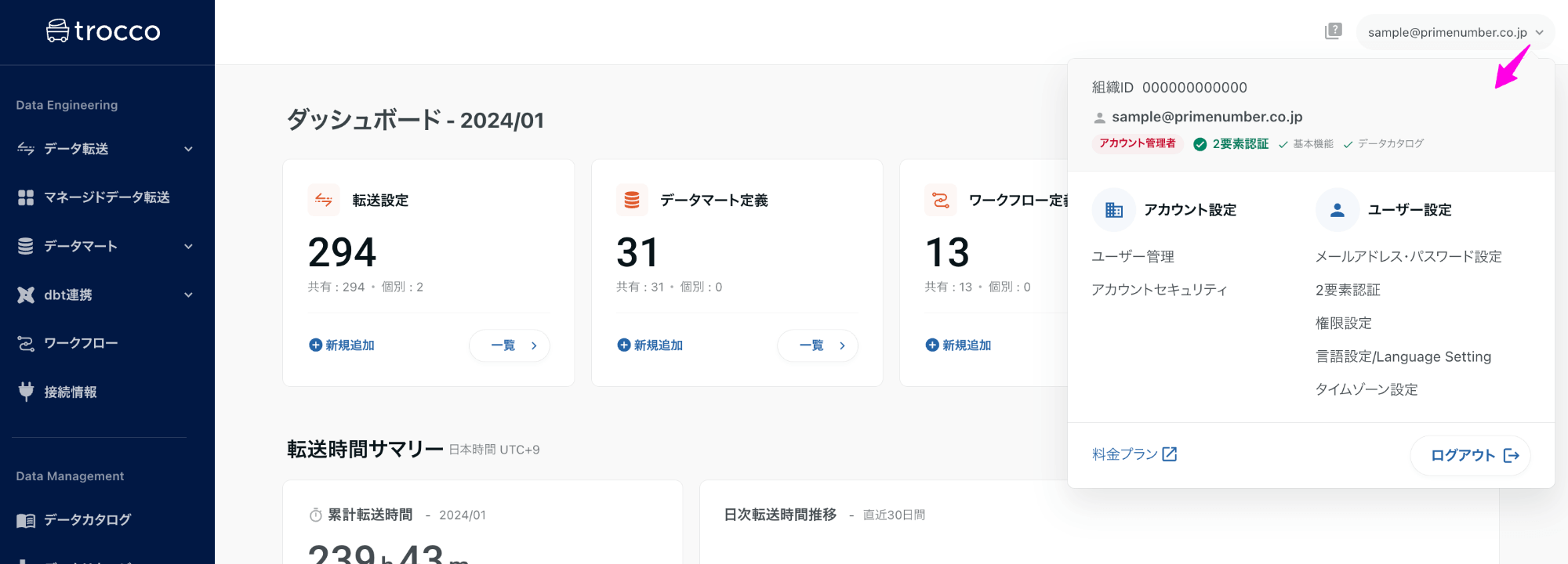

Redesigned pop-up menu in the upper right corner of the screen

The pop-up menu that appears when you click your own email address has been redesigned.

In addition to being able to check the organization ID and own privileges, users can now move to various settings related to accounts and users with a single click.

In addition, links to the following pages have been moved from the pop-up menu to the sidebar on the left side of the screen with this change.

- GitHub access token (under External Linkage)

- TROCCO API Key (under External Linkage)

- Audit log output

API Update

Data Source Google Ads / Data Destination Google Ads Conversions

The version of Google Ads API used during transfer has been updated from v13.1 to v14.1.

Please refer to the Google Ads API documentation for information on the new version.

2024-01-15

ETL Configuration

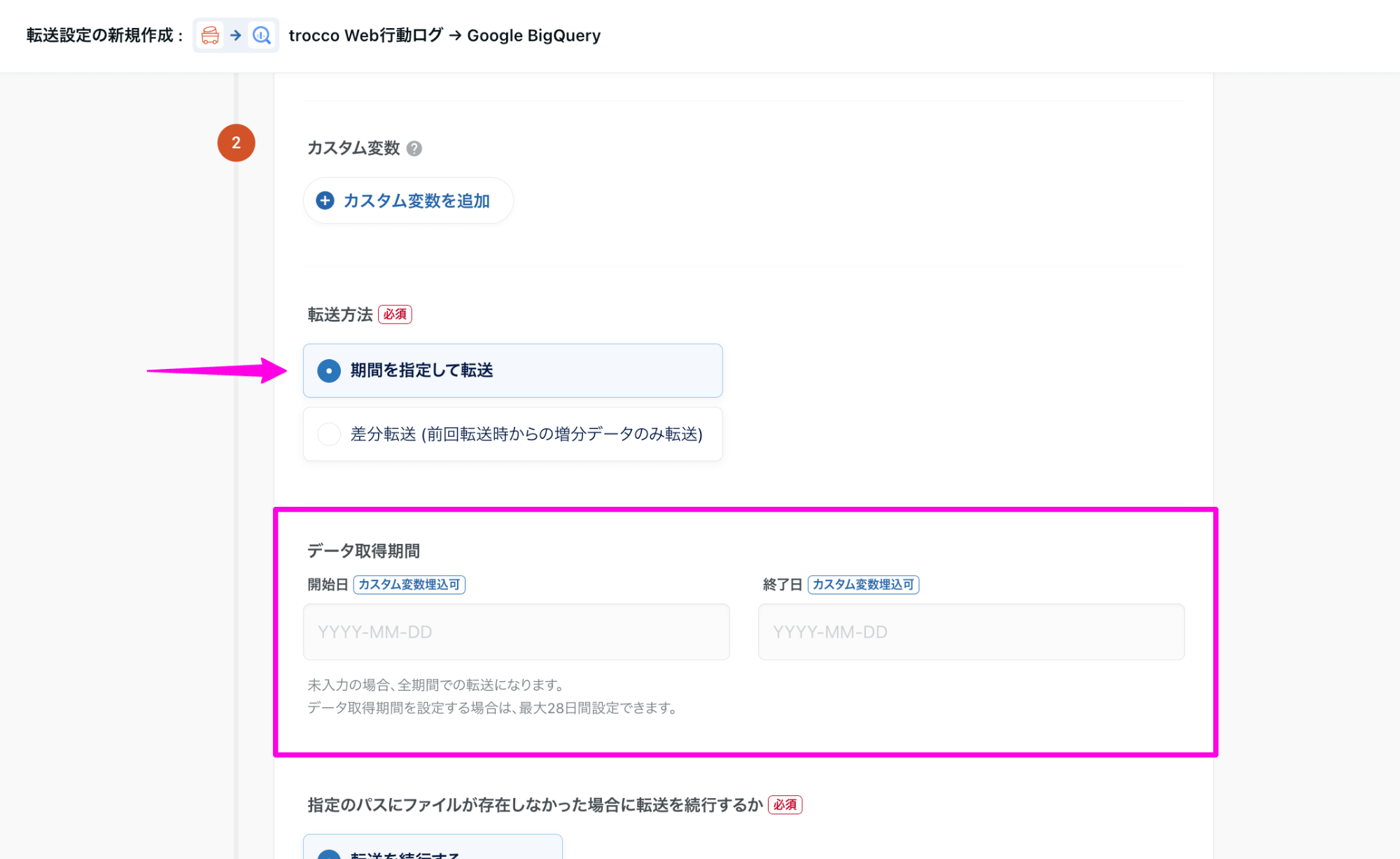

Data Source TROCCO Web Activity Log data acquisition period can be specified.

Data Retrieval Period can now be specified in ETL Configuration STEP1.

TROCCO Web Activity Log data can be retrieved for any time period by specifying a start and end date.

For more information, see Data Source - TROCCO Web Activity Log.

Connection Configuration

HTTP/HTTPS Connection Configuration using Client Credentials can be created.

In HTTP/HTTPS Connection Configuration, Grant Type can now be selected from Authorization Code or Client Credentials.

Previously, the grant type was fixed to Authorization Code. With this release, Client Credentials can also be selected.

For details, please refer to the HTTP/HTTPS Connection Configuration.

Data Catalog

Changed specifications for importing partitioned tables

In Google BigQuery Data Catalog, the specification to retrieve partitioned tables as catalog data has been changed.

From now on, for partitioned tables, only the table with the latest date will be retrieved as catalog data.

Previously, all segments in a partitioned table were obtained as catalog data.

Because each segment was considered a separate table in the Data Catalog, there were multiple hits for essentially the same table when searching for tables, and manual metadata entry operations such as basic metadata and user-defined metadata were difficult.

From now on, only tables with the most recent dates will be retrieved, making tables more searchable and facilitating the operation of manual metadata entry.
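The effect of keeping only the latest table can be sketched as follows. This assumes the tables in question share a base name followed by a `_YYYYMMDD` date suffix; that naming pattern, the function `latest_tables`, and the sample names are assumptions for illustration and are not taken from the Data Catalog implementation.

```python
import re

# Assumed naming pattern: <base>_<YYYYMMDD>.
DATED_TABLE = re.compile(r"^(?P<base>.+)_(?P<date>\d{8})$")


def latest_tables(names: list[str]) -> list[str]:
    """Keep undated tables as-is and only the newest table per dated base name."""
    latest: dict[str, str] = {}
    undated: list[str] = []
    for name in names:
        match = DATED_TABLE.match(name)
        if match is None:
            undated.append(name)
            continue
        base, date = match["base"], match["date"]
        # YYYYMMDD strings sort chronologically, so string comparison suffices.
        if base not in latest or date > latest[base]:
            latest[base] = date
    return sorted(undated + [f"{base}_{date}" for base, date in latest.items()])
```

Given `events_20240113`, `events_20240114`, and `events_20240115`, only `events_20240115` remains, so a search for "events" returns one table instead of three.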