Hello! Here is the release information for October 2022!

Data Catalog

Support for metadata CSV import 🎉

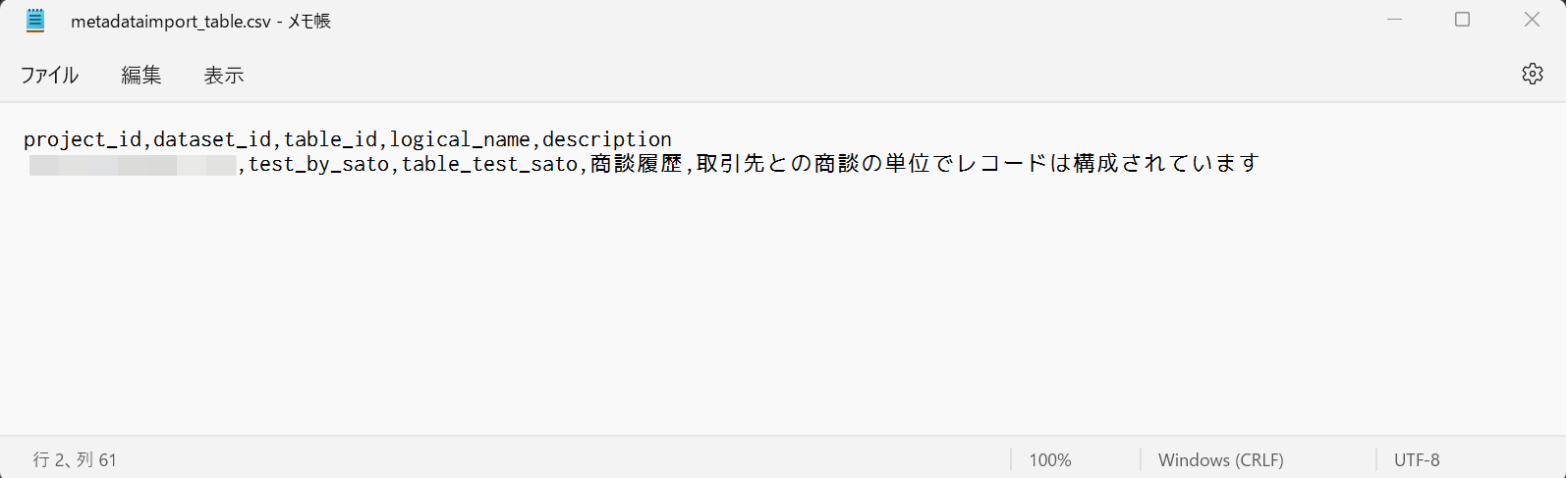

Basic metadata values can now be imported using CSV files 🎉.

Below is a brief overview of the import process.

See Metadata Import for details on the CSV file format and usage restrictions.

- Prepare a CSV file in the required format.

- Click Data Catalog Settings > Metadata Import.

- Select the import target, then upload and import the CSV file.

- Upon successful import, the basic metadata values are overwritten.
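
The exact CSV layout is defined in the Metadata Import documentation and is not reproduced here. As a minimal sketch, assuming a hypothetical layout with one row per column and the headers `database`, `schema`, `table`, `column` and `description`, the file from the first step could be generated and sanity-checked with Python's standard `csv` module before uploading:

```python
import csv
import io

# Hypothetical column names for illustration only; the real required
# columns are defined in the Metadata Import documentation.
FIELDNAMES = ["database", "schema", "table", "column", "description"]


def build_metadata_csv(rows: list[dict[str, str]]) -> str:
    """Serialize metadata rows to CSV text, starting with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)
    return buffer.getvalue()


def read_metadata_csv(text: str) -> list[dict[str, str]]:
    """Parse CSV text back into rows, failing if the header is unexpected."""
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames != FIELDNAMES:
        raise ValueError(f"unexpected header: {reader.fieldnames}")
    return list(reader)


rows = [
    {"database": "analytics", "schema": "public", "table": "orders",
     "column": "order_id", "description": "Unique order identifier"},
]
csv_text = build_metadata_csv(rows)
print(csv_text)
```

Using `csv.DictWriter` instead of joining strings by hand means descriptions containing commas or quotes are escaped correctly. Replace `FIELDNAMES` with the columns the documentation actually requires.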

Enhanced summary statistics display

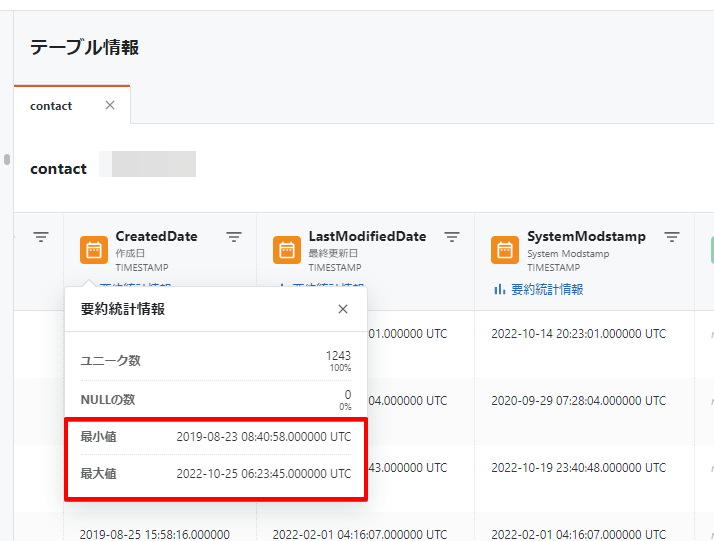

Minimum and maximum values are now displayed for date and time types.

Summary statistics can be viewed in "Column Information" and "Preview" on the table information page.

Connection Configuration

Support for connecting to Oracle Autonomous Database

Wallet files can now be uploaded when "Use tnsnames.ora file" is selected as the "Connection Method" in the Oracle Database Connection Configuration.

Uploading a wallet file enables connections to Oracle Autonomous Database.
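
The Oracle Autonomous Database wallet is a ZIP archive downloaded from Oracle Cloud that typically includes a `tnsnames.ora` file. Before uploading it, you may want to check which net service names (aliases) it defines. The following is a minimal sketch using only the Python standard library; `list_tns_aliases` is a hypothetical helper, not part of the product, and its simplified parser assumes each alias begins a new line, as is usual in Autonomous Database wallets:

```python
import io
import re
import zipfile


def list_tns_aliases(wallet_zip: bytes) -> list[str]:
    """Return the net service aliases defined in a wallet's tnsnames.ora."""
    with zipfile.ZipFile(io.BytesIO(wallet_zip)) as zf:
        # Look for tnsnames.ora anywhere in the archive (usually top level).
        names = [n for n in zf.namelist()
                 if n.rsplit("/", 1)[-1].lower() == "tnsnames.ora"]
        if not names:
            raise ValueError("tnsnames.ora not found in wallet archive")
        text = zf.read(names[0]).decode("utf-8")
    # Simplified: an alias starts a line and is followed by "=",
    # e.g. "mydb_high = (description=...)". Lines starting with "(" or "#" are skipped.
    return re.findall(r"^[ \t]*([A-Za-z0-9_.\-]+)[ \t]*=", text, flags=re.MULTILINE)


# Usage: a tiny in-memory archive standing in for a real wallet file.
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as zf:
    zf.writestr(
        "tnsnames.ora",
        "mydb_high = (description=(address=(protocol=tcps)(port=1522)"
        "(host=example.invalid))(connect_data=(service_name=mydb_high.example)))\n"
        "mydb_low = (description=(address=(protocol=tcps)(port=1522)"
        "(host=example.invalid))(connect_data=(service_name=mydb_low.example)))\n",
    )
print(list_tns_aliases(demo.getvalue()))  # ['mydb_high', 'mydb_low']
```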

ETL Configuration

Enhanced table partitioning for the Google BigQuery data destination 🎉

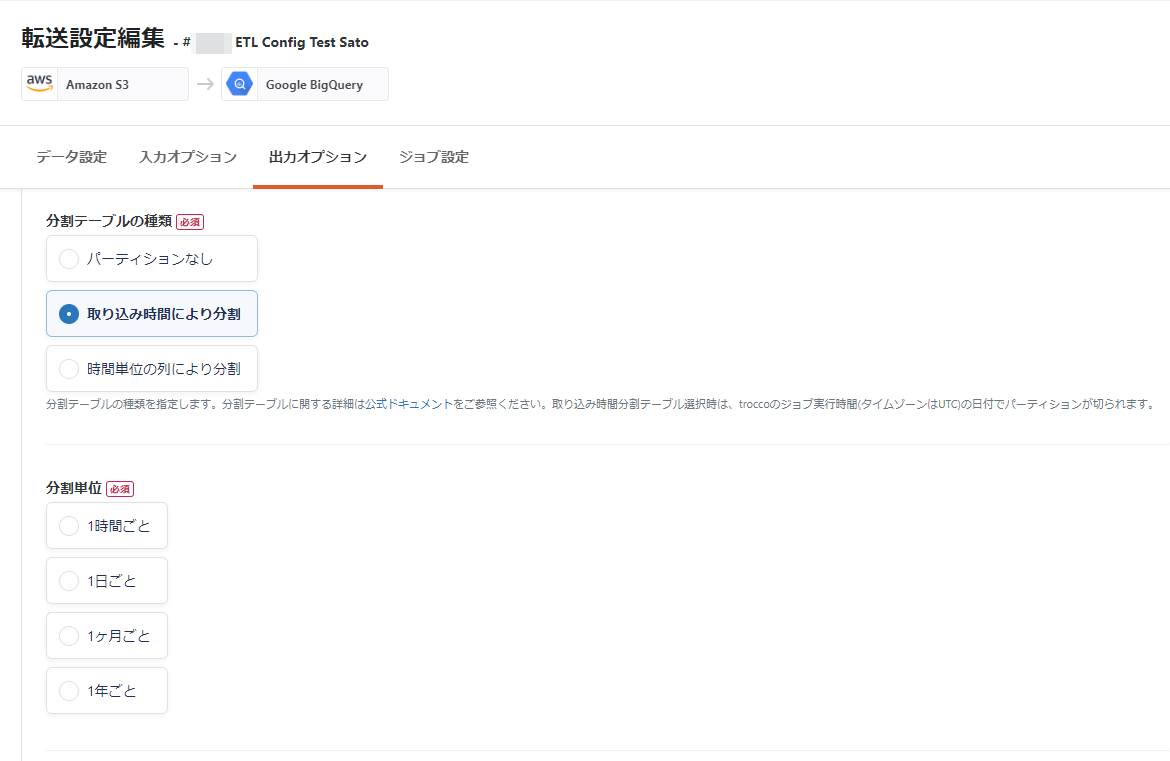

In STEP2 "Output Option" of the ETL Configuration for the Google BigQuery data destination, you can now set the time unit used as the basis for table partitioning more finely.

- You can now choose from four time units (hour, day, month, year) for table partitioning.

- All four time units are supported for both ingestion-time partitioning and time-unit column partitioning.

Splitting a table into smaller partitions lets queries that filter on the partitioning time read only the relevant partitions, which can improve query performance and reduce query costs.

For more information on partitioned tables, please refer to the Split Table Overview.
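
To illustrate what the time unit controls, the following sketch groups rows into partitions using the partition ID formats BigQuery uses for each unit (`YYYYMMDDHH` for hour, `YYYYMMDD` for day, `YYYYMM` for month and `YYYY` for year). It is a local simulation in plain Python, not a call to BigQuery; the helper names are hypothetical, and it assumes the timestamps are already in UTC, since BigQuery partition boundaries are based on UTC:

```python
from collections import defaultdict
from datetime import datetime

# Partition ID format for each time unit, mirroring BigQuery's naming.
PARTITION_FORMATS = {
    "HOUR": "%Y%m%d%H",
    "DAY": "%Y%m%d",
    "MONTH": "%Y%m",
    "YEAR": "%Y",
}


def partition_id(ts: datetime, unit: str) -> str:
    """Return the ID of the partition a (UTC) timestamp falls into."""
    return ts.strftime(PARTITION_FORMATS[unit])


def partition_rows(rows: list[tuple[datetime, str]], unit: str) -> dict[str, list[tuple[datetime, str]]]:
    """Group rows by the partition of their timestamp (first element)."""
    partitions: dict[str, list[tuple[datetime, str]]] = defaultdict(list)
    for row in rows:
        partitions[partition_id(row[0], unit)].append(row)
    return dict(partitions)


rows = [
    (datetime(2022, 10, 1, 8, 15), "a"),
    (datetime(2022, 10, 1, 8, 45), "b"),
    (datetime(2022, 10, 1, 9, 5), "c"),
    (datetime(2022, 11, 2, 0, 0), "d"),
]

for unit in PARTITION_FORMATS:
    print(unit, sorted(partition_rows(rows, unit)))

# A query filtered to a single hour only has to read that hour's partition.
hourly = partition_rows(rows, "HOUR")
print(len(hourly["2022100108"]), "of", len(rows), "rows scanned")  # 2 of 4 rows scanned
```

A finer unit gives smaller partitions and more precise pruning for narrow time filters, while a coarser unit keeps the number of partitions lower when data spans a long period.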

Workflow

Support for loop execution using Amazon Redshift queries 🎉

- Loop execution of tasks on a workflow can now be based on Amazon Redshift query results.

- Custom Variable expansion values in loop runs can be set based on Amazon Redshift query results.

- Workflows can be defined in which the expanded value of a Custom Variable changes with each execution.
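
Conceptually, loop execution runs the task once per row of the query result, expanding the Custom Variable to that row's value each time. The sketch below simulates this locally: the hard-coded `query_results` stands in for an Amazon Redshift query result, and the `$table_name$` placeholder syntax, the `expand` and `run_loop` helpers, and the use of the first result column are hypothetical choices for illustration, not the product's implementation:

```python
# Stand-in for rows returned by an Amazon Redshift query (hypothetical data).
query_results = [("orders",), ("customers",), ("payments",)]

# Hypothetical task body containing a Custom Variable placeholder.
TASK_TEMPLATE = "SELECT COUNT(*) FROM $table_name$"


def expand(template: str, variable: str, value: str) -> str:
    """Replace every $variable$ placeholder in the template with value."""
    return template.replace(f"${variable}$", value)


def run_loop(template: str, variable: str, rows: list[tuple[str, ...]]) -> list[str]:
    """Expand the template once per result row, using the row's first column."""
    executed = []
    for row in rows:
        executed.append(expand(template, variable, row[0]))
    return executed


for statement in run_loop(TASK_TEMPLATE, "table_name", query_results):
    print(statement)
```

Each iteration sees a different expanded value, which is what allows a single task definition to process, for example, one table per query result row.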

The following is a brief description of the procedure for setting up loop execution.

-

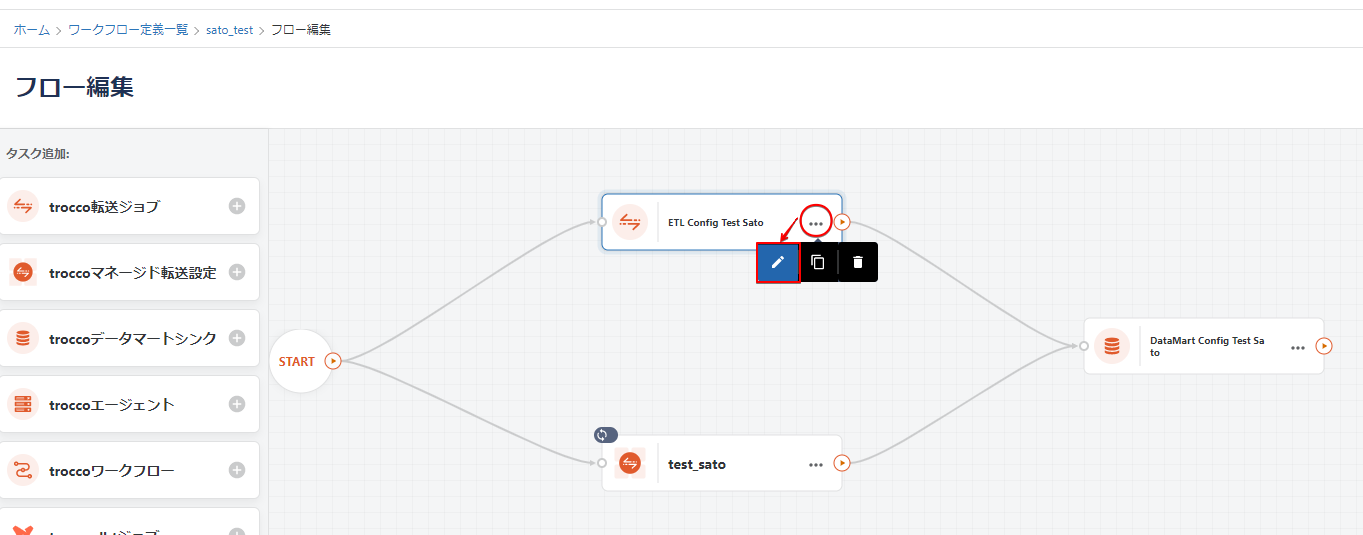

On the Flow Edit screen of the Workflow definition, click the button on the task you want to execute the loop as follows

-

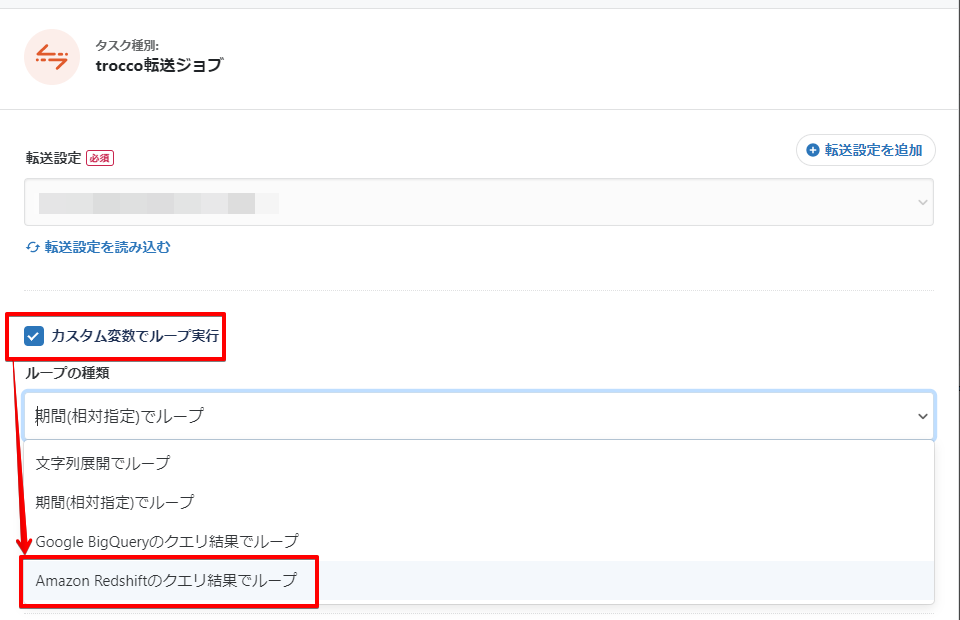

Enable Loop Execution in Custom Variable and select Loop in Amazon Redshift Query Results in Loop Type.

-

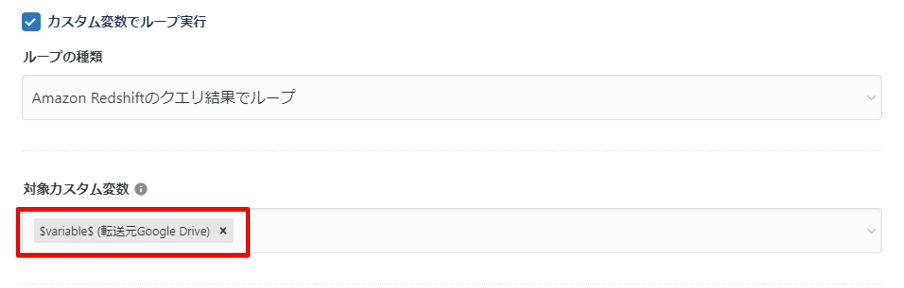

At Target Custom Variable, specify any Custom Variable.

-

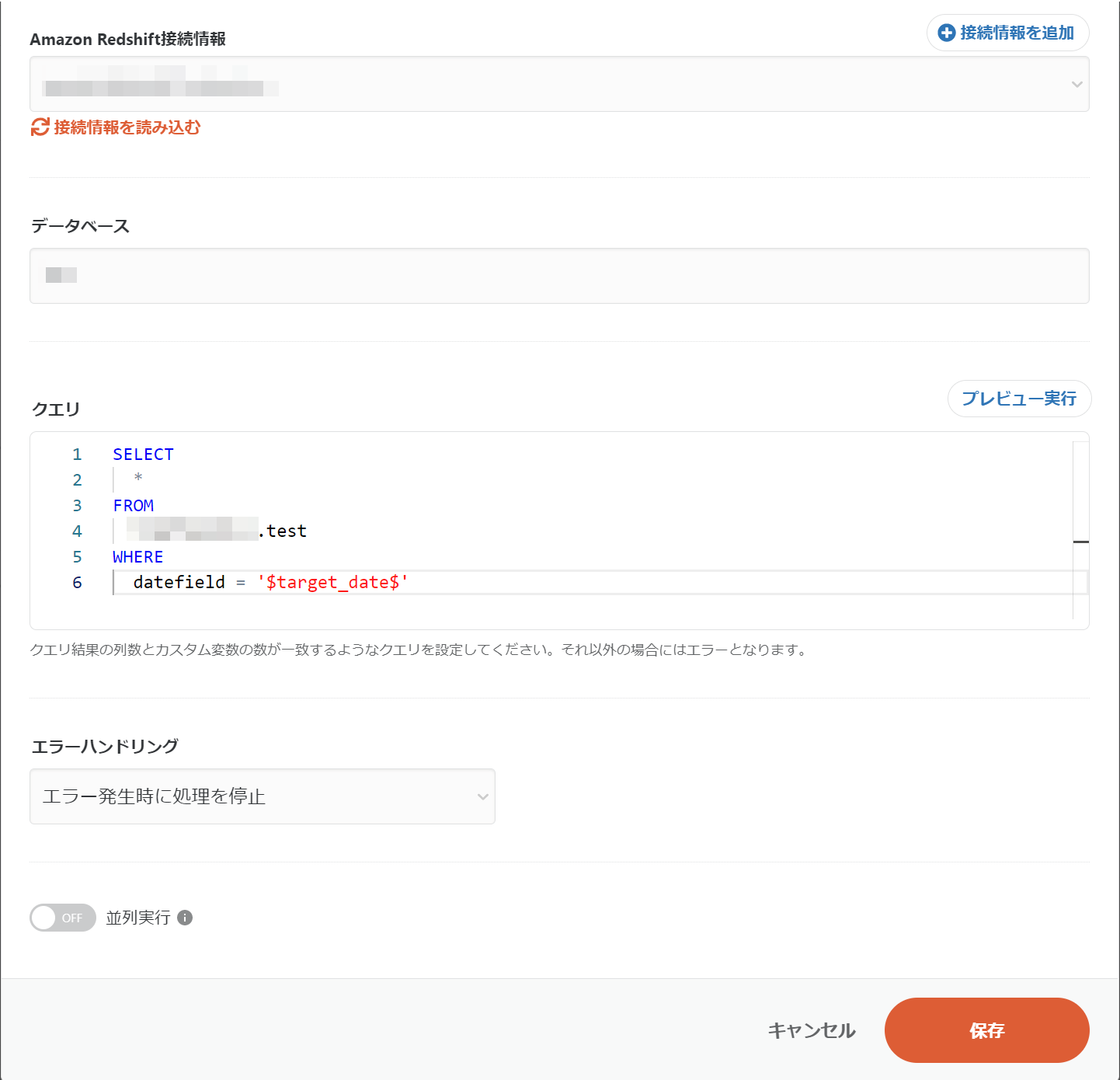

Fill in the various fields and click Save.

**That's all for this release.**

**If you are interested in any of these releases, please feel free to contact your Customer Success Representative.**

Happy Data Engineering!