Hello! Here is the release information for September 2023.

Notice

The method of logging into TROCCO has been changed.

For more information, please refer to the Change of Login Method to TROCCO.

ETL Configuration

Data Destination Facebook Custom Audience (Beta Feature) 🎉

Data Destination Facebook Custom Audience has been added as a new Connector (Beta Feature).

See Data Destination - Facebook Custom Audience (Beta Feature) for more information on the various input fields and column mappings.

Data Destination File and Storage System Connector supports zip file compression 🎉.

Some Data Destination file/storage system Connectors now support compression of files in zip format.

Zip can be selected for file compression in ETL Configuration STEP 1 when one of the following Connectors is used as the Data Destination.

- Data Destination Azure Blob Storage

- Data Destination FTP/FTPS

- Data Destination Google Cloud Storage

- Data Destination KARTE Datahub

- Data Destination S3

- Data Destination SFTP

Job Waiting Timeout configuration for Data Source Google BigQuery 🎉

In the Advanced Settings of ETL Configuration STEP 1, you can specify the timeout period, in seconds, for waiting for a Job.

When many queries are running in BigQuery, slot limits may cause jobs to wait before they are executed. If this waiting time reaches the timeout period, the relevant ETL Job fails.

In such cases, increasing the "Job Waiting Timeout (sec)" value can help avoid ETL Job failures.

Extended the data types that can be transferred from Data Source Oracle Database 🎉.

Data to be imported from Data Source Oracle Database can now be converted to string type and transferred.

Click on Set Details in ETL Configuration STEP 1, specify the target column name, and select string for the Data Type.

For example, numerical values with a large number of digits, which previously lost data during transfer, can now be converted to string type and transferred without loss.
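As an illustration of why a string can be safer for very long numbers, here is a minimal Python sketch. It does not reproduce how TROCCO or Oracle Database handle values internally; the 20-digit value is hypothetical, and the 64-bit float is only one assumed way in which digits can be lost in transit.

```python
# Hypothetical 20-digit value, such as one that might be stored in an Oracle NUMBER column.
original = "12345678901234567890"

# A 64-bit float keeps only about 15 to 17 significant digits,
# so the low-order digits change after a round trip.
round_tripped = str(int(float(original)))

# Carrying the value as a string keeps every digit.
as_string = str(original)

print(round_tripped == original)  # False
print(as_string == original)      # True
```

If the destination needs to do arithmetic on such a column, the string can be cast back to an exact numeric type there.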

Data Destination Snowflake's NUMBER type output can specify precision and scale 🎉.

You can specify the precision and scale of the NUMBER type in the Output Option tab > Column Settings > Data Type in ETL Configuration STEP 2.

Use this function to convert data to be transferred to Snowflake to a NUMBER type of any precision and scale.

For more information on the precision and scale of the NUMBER type, please refer to the official Snowflake documentation - NUMBER.
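To make precision and scale concrete, the sketch below models a NUMBER(precision, scale) column with Python's `decimal` module: precision is the total number of digits and scale is the number of digits after the decimal point. The helper name `to_number`, the parameter bounds, and the half-up rounding are assumptions made for illustration, so check the Snowflake documentation for the exact behavior.

```python
from decimal import Decimal, ROUND_HALF_UP, localcontext


def to_number(value: str, precision: int, scale: int) -> Decimal:
    """Round `value` to `scale` fractional digits and check it fits `precision` total digits.

    Hypothetical helper: the bounds and rounding mode are illustrative assumptions.
    """
    if precision < 1 or not 0 <= scale <= precision:
        raise ValueError("expected precision >= 1 and 0 <= scale <= precision")
    with localcontext() as ctx:
        ctx.prec = 60  # enough working digits for long inputs
        quantum = Decimal(1).scaleb(-scale)  # scale=2 -> Decimal("0.01")
        rounded = Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)
    # Total stored digits (integer part plus fractional part) must not exceed precision.
    if len(rounded.as_tuple().digits) > precision:
        raise ValueError(f"{value} does not fit NUMBER({precision},{scale})")
    return rounded


print(to_number("123.456", 5, 2))  # 123.46
```

With NUMBER(5,2), a value such as 1234.5 is rejected by this sketch, because it would need four integer digits while only three remain after reserving two for the scale.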

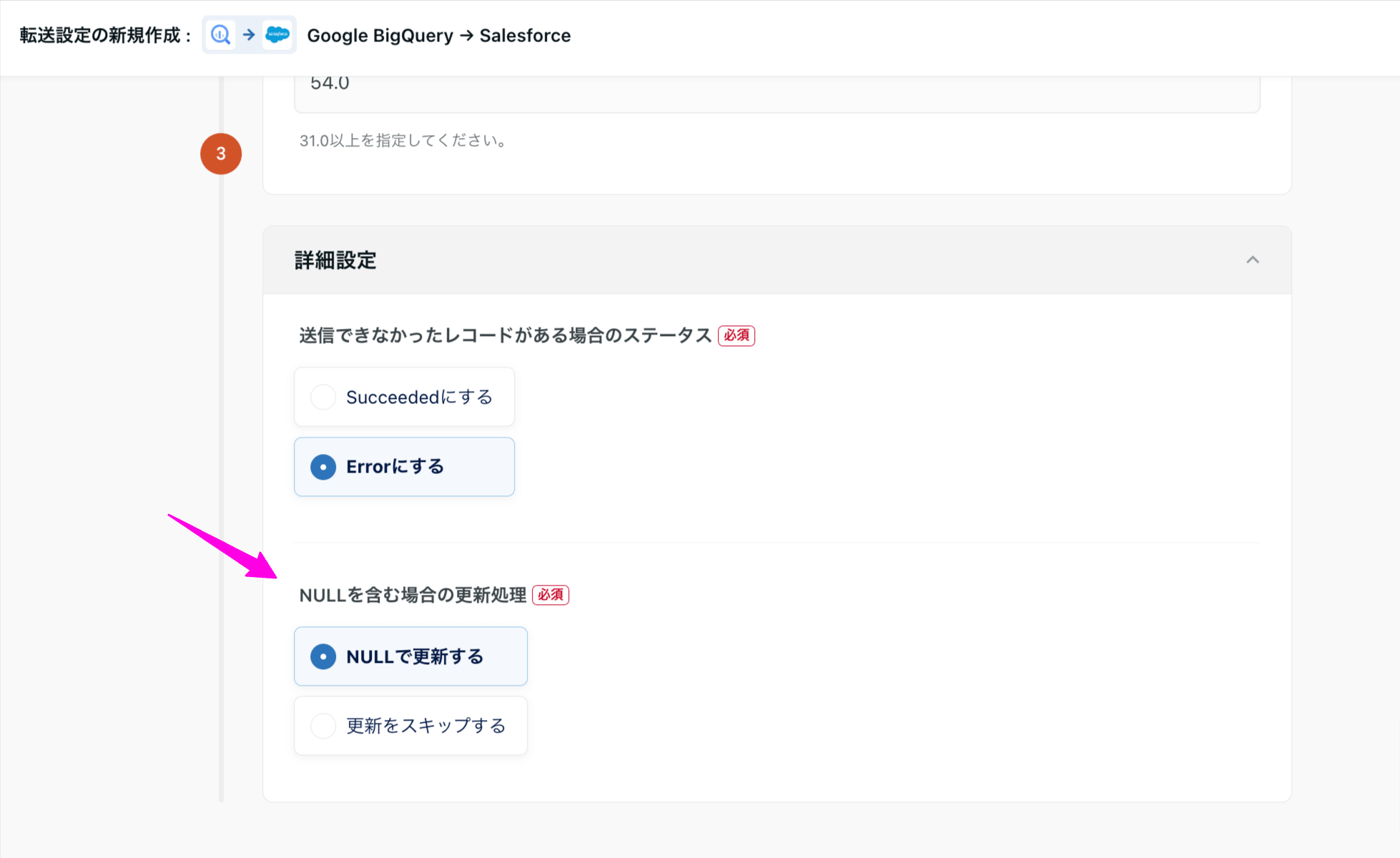

Added Update Processing Configuration for NULL values forwarded to Data Destination Salesforce 🎉.

You can now select the update process when the update data for an existing record in Salesforce contains a null value.

You can choose to update with NULL or skip updating in the Advanced Settings of ETL Configuration STEP 1.

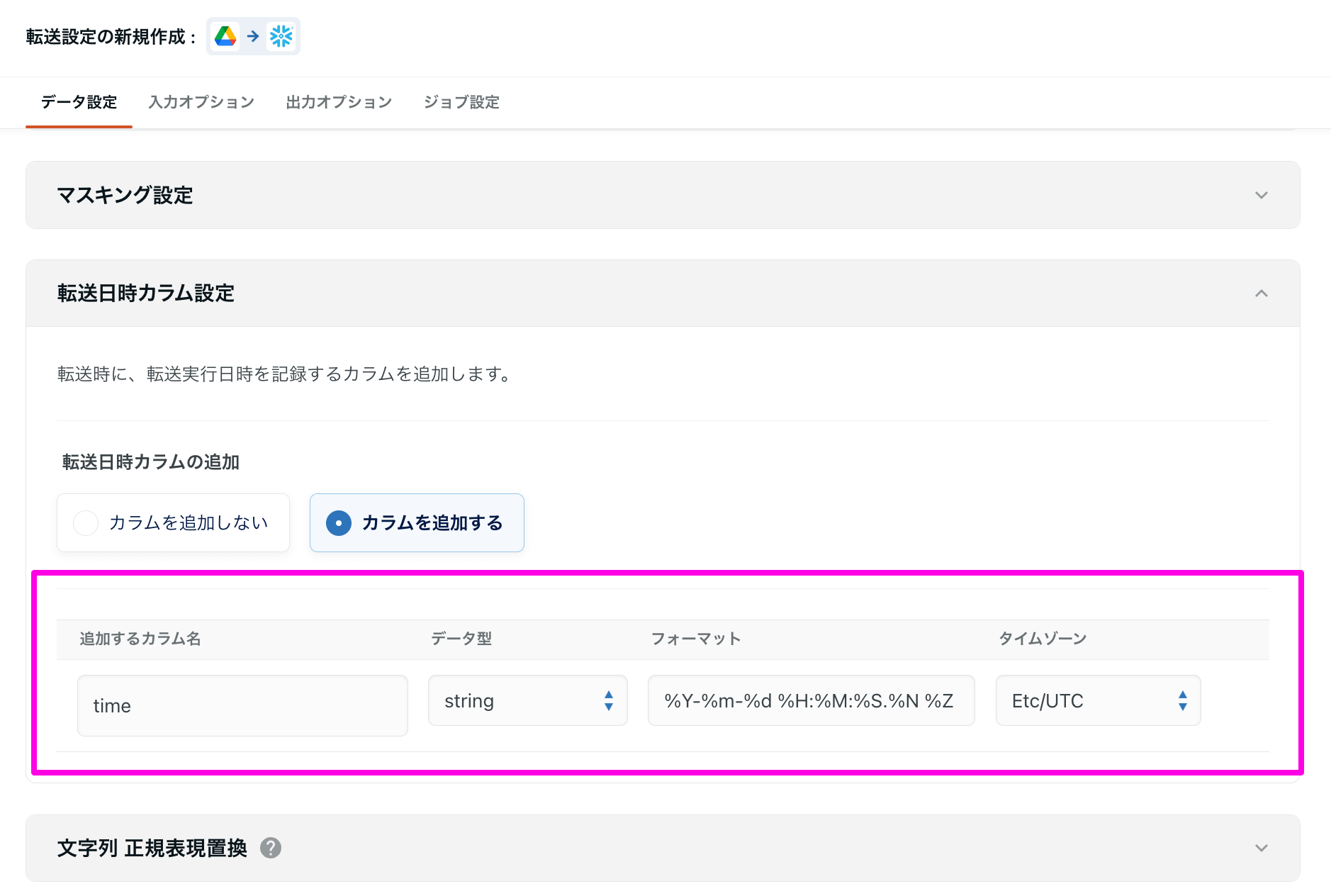

Expanded items for Date/Time columns to be added in Transfer Date Column Setting 🎉.

The Transfer Date Column Setting in ETL Configuration STEP 2 > Advanced Settings > Data Setting tab now allows more flexible configuration.

If the Data Type of the Transfer Date Column is set to string, the following items can be configured.

- Format: Specifies the format of the date/time expansion value.

- Time zone: Selects the time zone to be expanded in the format's time zone specifier, from Etc/UTC or Asia/Tokyo.
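The effect of combining a format with a time zone can be sketched in Python as follows. The format syntax that TROCCO accepts is not described here, so the `strftime` directives, the helper name `format_transfer_time`, and the sample timestamp are all assumptions. Fixed UTC offsets stand in for the two selectable zones, which is safe because neither observes daylight saving time.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets standing in for the two selectable time zones.
ZONES = {
    "Etc/UTC": timezone.utc,
    "Asia/Tokyo": timezone(timedelta(hours=9)),
}


def format_transfer_time(moment: datetime, fmt: str, zone_name: str) -> str:
    """Render an aware datetime as a string in the chosen zone (hypothetical helper)."""
    return moment.astimezone(ZONES[zone_name]).strftime(fmt)


# Hypothetical transfer time: 00:30 UTC on 1 September 2023.
moment = datetime(2023, 9, 1, 0, 30, tzinfo=timezone.utc)
fmt = "%Y-%m-%d %H:%M:%S %z"  # %z is the time zone specifier in this sketch

print(format_transfer_time(moment, fmt, "Etc/UTC"))     # 2023-09-01 00:30:00 +0000
print(format_transfer_time(moment, fmt, "Asia/Tokyo"))  # 2023-09-01 09:30:00 +0900
```

The same instant produces different strings depending on the selected zone, which is why the time zone choice matters once the column is stored as a string.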

Managed ETL

Schema Change Detection for ETL Configuration can be set in bulk 🎉.

Schema Change Detection for Managed ETL Configuration can now be set in bulk in STEP 3.

You can receive notifications of schema changes without having to configure each ETL Configuration individually.

Data Mart Configuration

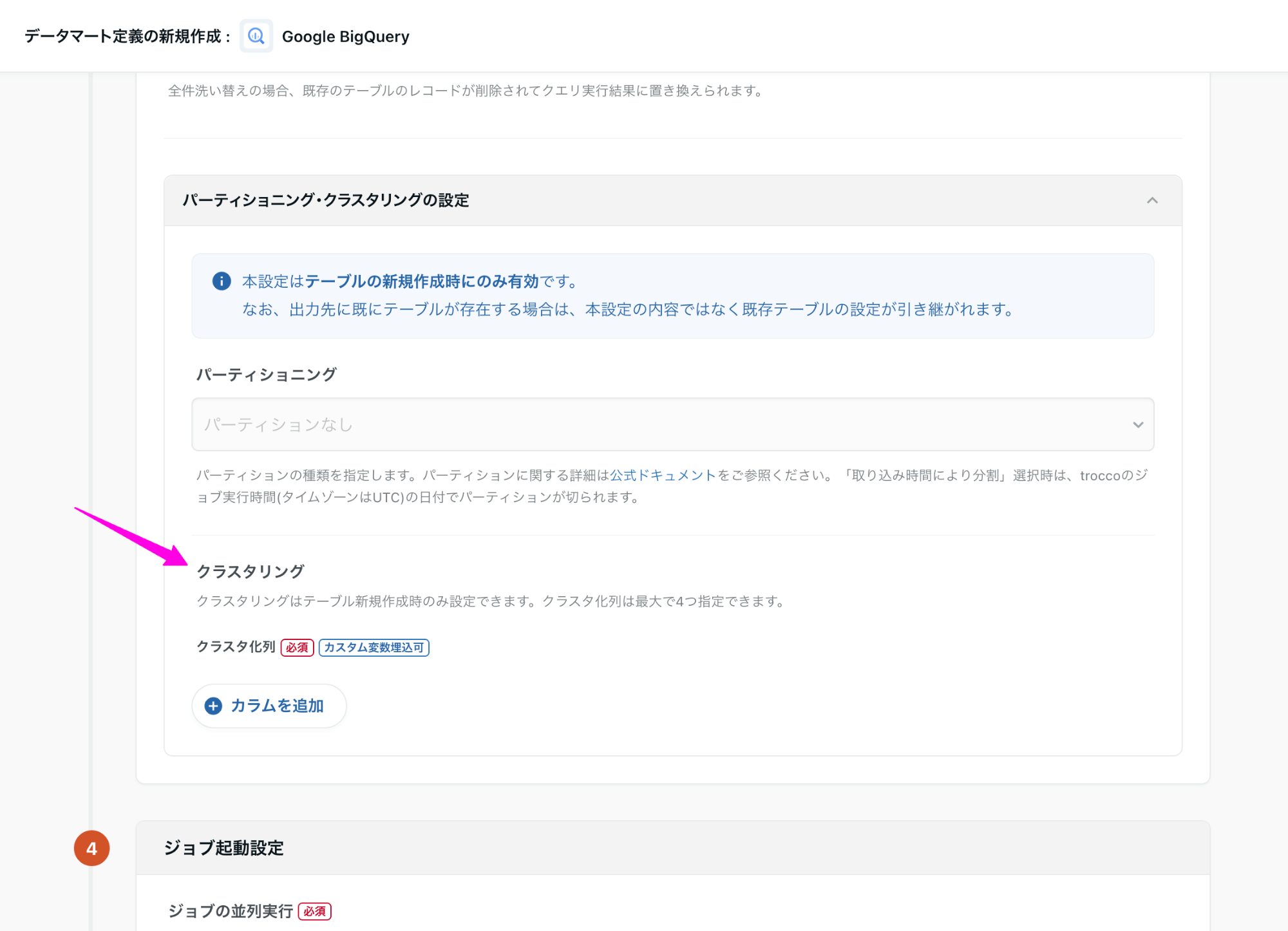

Data Mart Configuration in Google BigQuery allows clustering settings 🎉.

A clustering configuration item has been added to Data Mart Configuration in Google BigQuery.

Clustering settings can now be configured for tables newly created by executing a data mart job.

However, if a table already exists in the output destination, the settings of the existing table are retained and this setting is not applied.

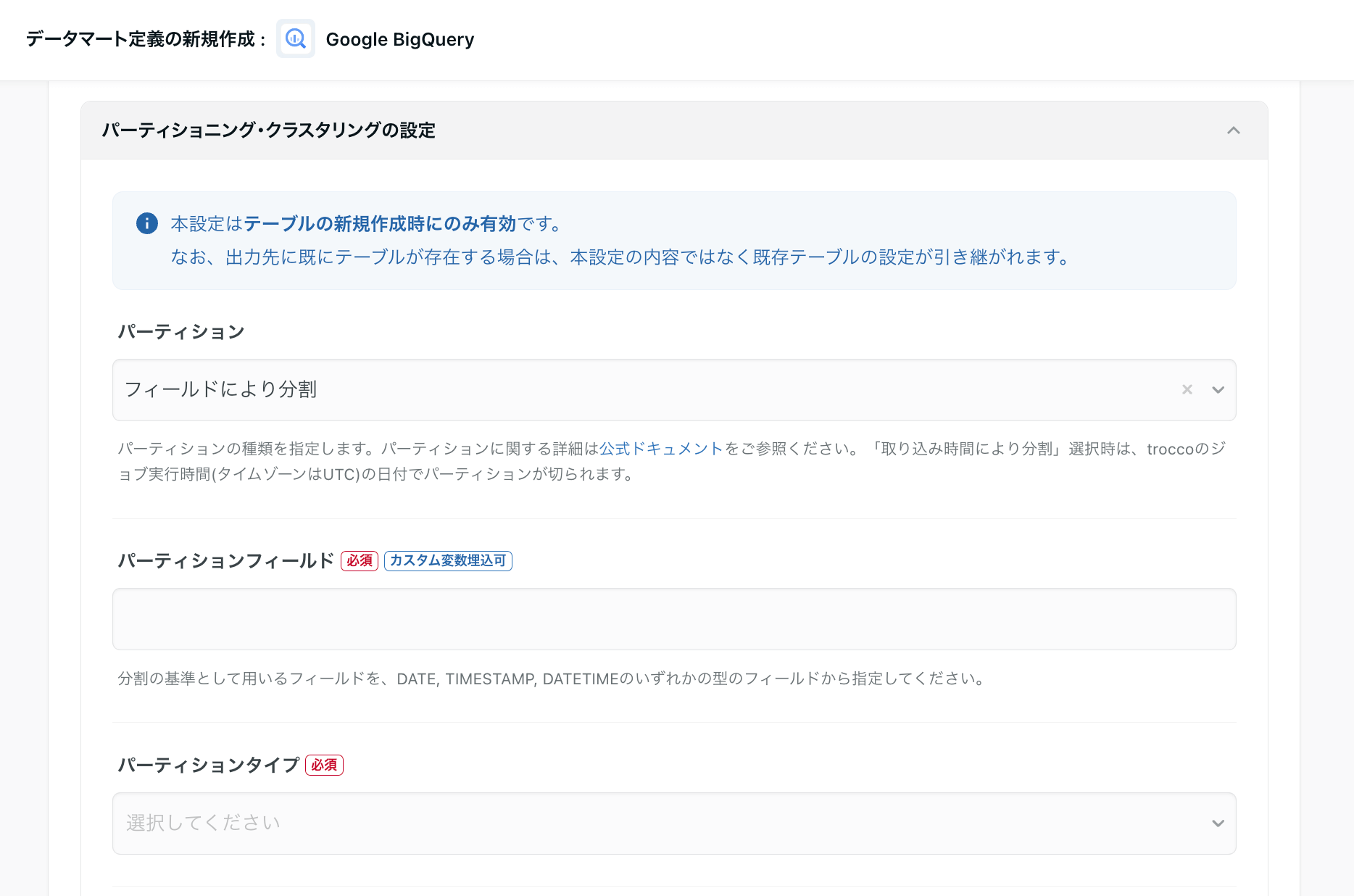

Custom Variable embedding support for Google BigQuery partition fields 🎉.

Custom Variable embedding is now available in the Partition field of Data Mart Configuration in Google BigQuery.

The value of the partition field can be dynamically specified at job execution.

Note: The Partition field is a setting that can be entered when partitioning by field is selected.

dbt Integration

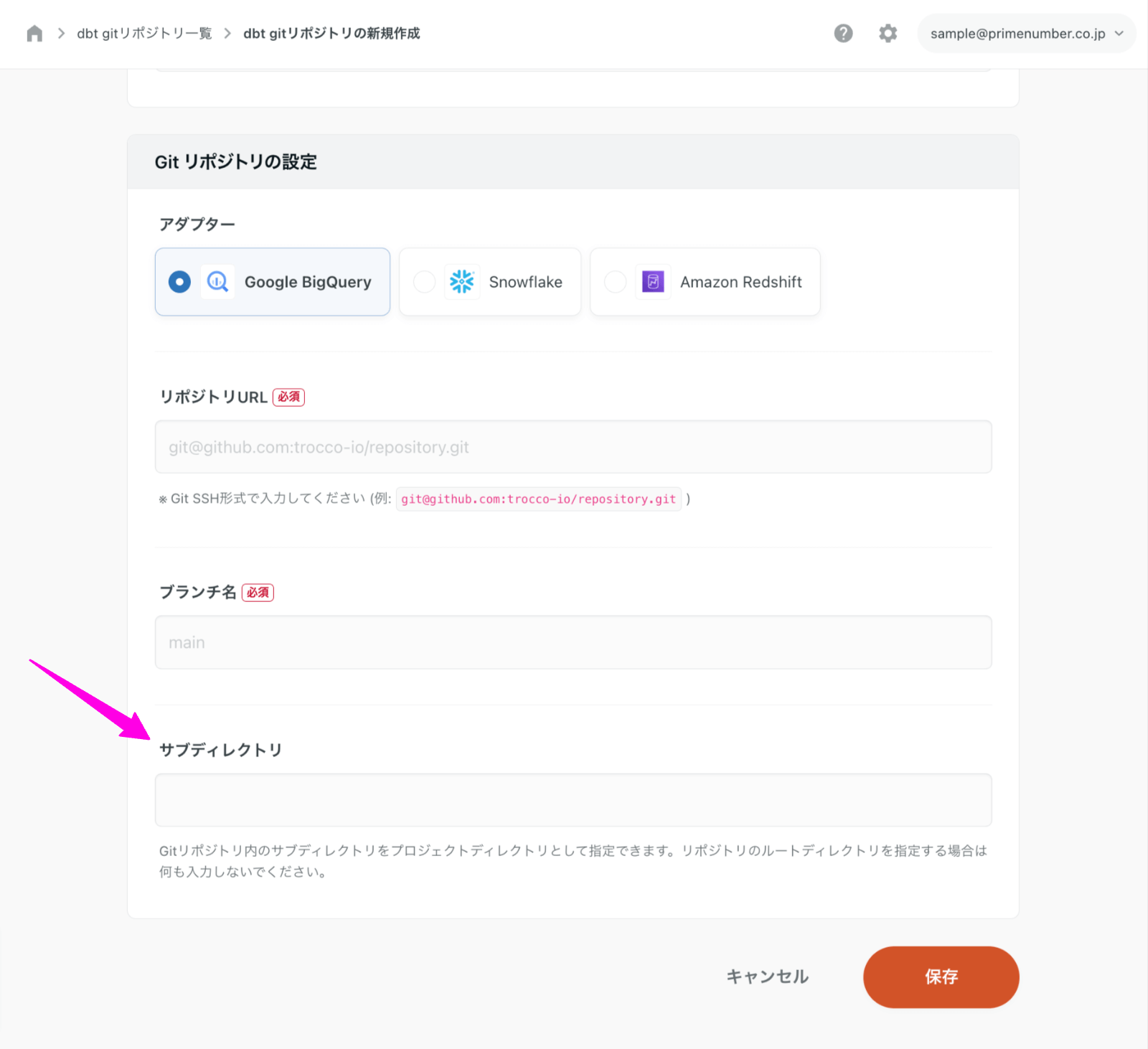

dbt Git repository settings can now specify subdirectories 🎉.

Subdirectories in the Git Integration repository can now be specified as project directories for dbt integration.

Until now, the root directory of the Git repository was fixed as the project directory.

From now on, you can specify any directory in the Git Integration repository as the destination for dbt integration.

Data Catalog

Google BigQuery Data Catalog supports authentication method using service account 🎉.

"Service Account (JSON Key)" can now be selected as the authentication method for Google BigQuery Data Catalog.

For details, please refer to the "For First-Time Users" page.

API Update

Data Source Google Ads / Data Destination Google Ads Conversions

The version of Google Ads API used in the transfer was updated from v12 to v13.1.

Please refer to the Google Ads API documentation for information on the new version.

Data Source Facebook Ad Insights

The version of the Facebook API used for transfer has been updated to v17.

Please refer to the Meta for Developers documentation for the new version.

Security

Changed the period of time that login status is retained to 48 hours.

For enhanced security, the retention period of login status has been changed to 48 hours.

Once 48 hours have elapsed since your last operation in TROCCO, you are logged out automatically.

The next time you access TROCCO, you will need to log in.

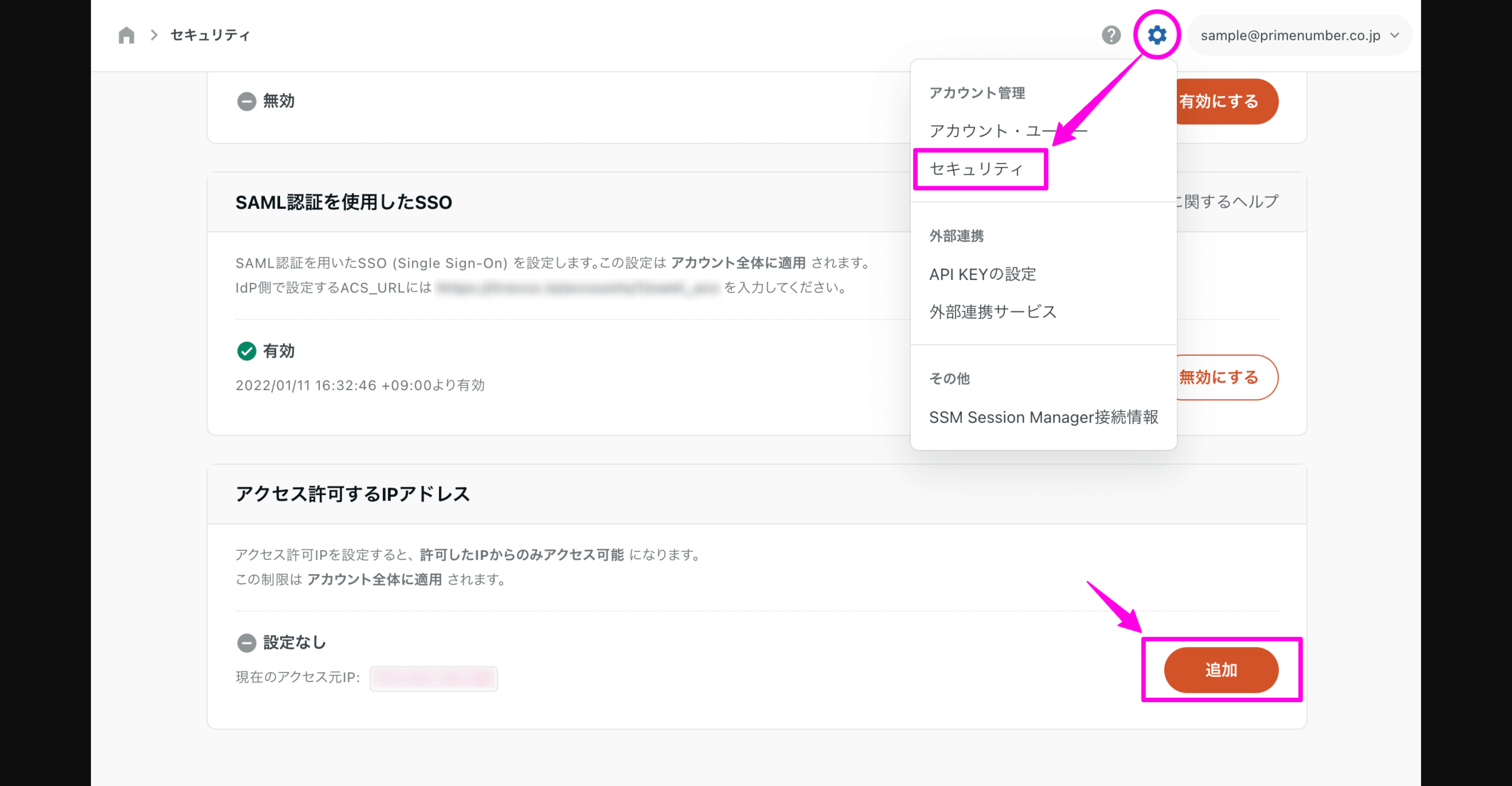

Allowed IP Addresses to be specified in CIDR format

Allowed IP Addresses can now be specified in CIDR format (a writing style in which the IP address and subnet mask are expressed simultaneously).

For example, if you enter 192.0.2.0/24, access is allowed from 192.0.2.0 to 192.0.2.255.

Click the Add Allowed IP Address button on the Security screen to go to the Add Allowed IP Address screen.
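To check which addresses a CIDR block covers before registering it, Python's standard `ipaddress` module can be used, as in this minimal sketch. The helper `is_allowed` and the sample client addresses are hypothetical and only mimic an allow-list check; they are not how TROCCO evaluates access.

```python
import ipaddress

# The CIDR block from the example above.
network = ipaddress.ip_network("192.0.2.0/24")

# First and last addresses covered by the block.
print(network[0], network[-1])  # 192.0.2.0 192.0.2.255


def is_allowed(client_ip: str) -> bool:
    """Return True if the address falls inside the allowed block (hypothetical helper)."""
    return ipaddress.ip_address(client_ip) in network


print(is_allowed("192.0.2.77"))  # True
print(is_allowed("192.0.3.1"))   # False
```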

Other

- Filter button in ETL Configuration list changed to a fixed position so that it is always displayed on the screen 🎉.

- dbt Job Setting in Google BigQuery supports selective location input 🎉.

**The contents of this release are described above.**

**Please feel free to contact our Customer Success Representative if you are interested in any of these releases.**

Happy Data Engineering!